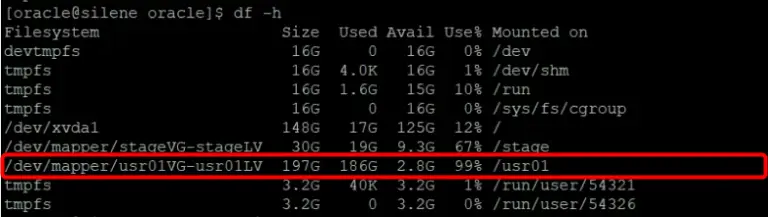

Is your Oracle ODI environment running out of storage space? You’re not alone. Many organizations face this critical challenge, but there’s a systematic way to solve it.

In this guide, I’ll share practical solutions from my recent experience fixing a production environment that had reached 99% storage utilization. You’ll learn exactly how to identify, resolve, and prevent ODI storage issues.

Why Storage Management Matters in Oracle ODI

Storage issues in ODI environments can sneak up on you. When left unchecked, they can lead to:

- Failed data integrations due to insufficient temporary space

- System crashes from inability to write log files

- Corrupted backups from incomplete writes

- Slower overall system performance

Before diving into solutions, it helps to know where you stand.

As a rule of thumb, keep at least 20% of each filesystem free for stable operation. Anything less requires immediate attention.

Here’s how to check your storage status:
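A minimal sketch for this check, assuming a Linux host with standard `df` and `awk`. The default path `/usr01` is the mount point used by the commands later in this guide; the function name `storage_status` is hypothetical. It prints the normal human-readable `df` view and then a one-line used/free summary for the filesystem holding the path.

```bash
#!/bin/bash
# Minimal sketch: show used and free space for the filesystem holding a path.
# /usr01 is the mount point used elsewhere in this guide; adjust as needed.
storage_status() {
    local path="${1:-/usr01}"
    # Human-readable overview (sizes shown as K/M/G)
    df -h "$path"
    # -P forces one line per filesystem; field 5 is the "Capacity" percentage
    df -P "$path" | awk 'NR == 2 {
        used = $5; sub(/%/, "", used)
        printf "%s: %d%% used, %d%% free\n", $6, used, 100 - used
    }'
}
```

For example, `storage_status /usr01` shows the `df -h` table followed by a line such as "/usr01: 85% used, 15% free".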

Watch for these warning signs:

- Usage above 80%

- Rapidly decreasing free space

- Large temporary file accumulation
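The first warning sign can be checked automatically. This is a sketch under assumptions: the 80% threshold comes from the list above, `/usr01` is the example mount, and the function names are hypothetical. It returns 1 when usage is above the threshold, so it can feed an alerting job.

```bash
#!/bin/bash
# Minimal sketch: warn when a filesystem crosses a usage threshold (80% by default).
usage_percent() {
    # Print the integer "Capacity" value from POSIX-format df output
    df -P "$1" 2>/dev/null | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

check_usage() {
    local path="${1:-/usr01}" threshold="${2:-80}" used
    used="$(usage_percent "$path")"
    # An empty result means df could not read the path
    [ -n "$used" ] || { echo "ERROR: cannot read usage for $path" >&2; return 2; }
    if [ "$used" -gt "$threshold" ]; then
        echo "WARNING: $path is at ${used}% (threshold ${threshold}%)"
        return 1
    fi
    echo "OK: $path is at ${used}%"
}
```

Running it daily and keeping its output also gives you a simple history for spotting rapidly decreasing free space.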

In the environment described here, ODI-related storage is divided into three main areas, each with its own distinct purpose and characteristics.

1. RI Stage Area

First, let’s talk about the RI Stage Area. This is where most of your backup data lives. It typically takes up more than 20GB, with system backups being the biggest space consumers. It also houses ODI administrator backups and keeps track of your configuration files and logs. Think of it as your system’s safety deposit box – it’s where all the critical backup data accumulates over time, making it one of the fastest-growing areas in terms of storage consumption.

2. RDE Area

Then we have the RDE Area, which is essentially your system’s active workspace. It holds the middleware files used in day-to-day processing, some backup data, and all your Java-related files. It’s like the system’s office space: it’s where the actual work happens, so it needs regular housekeeping to stay efficient.

3. OBIEE Application Storage

Finally, there’s the OBIEE Application Storage, which is usually the biggest storage consumer of all. The middleware files alone can take up more than 80GB. It stores different versions of installations and maintains temporary cache files. Think of it as your system’s warehouse – it’s massive, constantly active, and needs careful monitoring because it directly impacts your system’s performance.

Each of these areas plays a crucial role in the overall system stability. Understanding their unique characteristics helps you develop the right management strategy – it’s not just about clearing space, but knowing where and how to maintain it effectively.

The key is to remember that these aren’t just separate storage spaces; they’re interconnected parts of your system’s ecosystem. When one area starts having issues, it can quickly affect the others, which is why a comprehensive management approach is so important.
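Because the areas affect each other, it is useful to see all three sizes side by side. This is a sketch only: apart from `/usr01/oracle/Middleware` (used elsewhere in this guide), the directory paths are hypothetical placeholders, and `area_report` is an illustrative name. Replace the paths with your real RI stage, RDE, and OBIEE locations.

```bash
#!/bin/bash
# Minimal sketch: report the size of each storage area in one pass.
# Format: one "name|path" pair per line. The RI and RDE paths are HYPOTHETICAL.
AREAS='RI Stage Area|/usr01/ri_stage
RDE Area|/usr01/rde
OBIEE Application Storage|/usr01/oracle/Middleware'

area_report() {
    # Optional argument overrides the default list (same "name|path" format)
    printf '%s\n' "${1:-$AREAS}" | while IFS='|' read -r name dir; do
        [ -n "$dir" ] || continue
        if [ -d "$dir" ]; then
            # du -sh prints "<size><TAB><dir>"; keep only the size column
            size="$(du -sh "$dir" 2>/dev/null | cut -f1)"
            printf '%-26s %s\n' "$name" "$size"
        else
            printf '%-26s missing: %s\n' "$name" "$dir"
        fi
    done
}
```

Running `area_report` before and after each cleanup shows which area actually shrank.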

Step-by-Step Storage Optimization

When it comes to optimizing Oracle ODI storage, we follow a three-phase approach that’s both systematic and practical.

Phase 1: Problem Area Analysis

Let’s start with Phase 1: Problem Area Analysis. When you’re dealing with storage issues, your first priority is finding out exactly what’s taking up all that space. We use two simple but powerful commands for this. First, we look for large log files that are over a month old – these are often forgotten and can eat up a lot of space. Then we identify the heaviest directories in your middleware folder, giving us a clear picture of where the bulk of your storage is being used.

1. Identify large log files older than 30 days (largest first):

find /usr01 -name "*.log" -type f -mtime +30 -ls 2>/dev/null | sort -k7,7nr | head -20

2. Find storage-heavy directories:

du -h --max-depth=3 /usr01/oracle/Middleware | sort -rh | head -10
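Before deleting anything, it can help to estimate how much space the old logs actually hold. A minimal sketch, assuming GNU `find` (for `-printf`); the defaults mirror the first command above, and `old_log_bytes` and `human_bytes` are hypothetical helper names.

```bash
#!/bin/bash
# Minimal sketch: total the size of *.log files older than N days under a root.
old_log_bytes() {
    local root="${1:-/usr01}" days="${2:-30}"
    # %s prints each file's size in bytes; awk sums them (prints 0 if nothing matches)
    find "$root" -name "*.log" -type f -mtime +"$days" -printf '%s\n' 2>/dev/null |
        awk '{ total += $1 } END { printf "%.0f\n", total }'
}

human_bytes() {
    # Convert a byte count to a rough human-readable figure (binary units)
    awk -v b="$1" 'BEGIN {
        split("B KiB MiB GiB TiB", u, " "); i = 1
        while (b >= 1024 && i < 5) { b /= 1024; i++ }
        printf "%.1f %s\n", b, u[i]
    }'
}
```

For example, `human_bytes "$(old_log_bytes /usr01 30)"` prints a figure such as "12.4 GiB", a quick way to judge whether log cleanup alone will bring usage under the threshold.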

Phase 2: Implement Regular Maintenance

Moving on to Phase 2: Regular Maintenance. This is where automation becomes your best friend. We’ve developed a script that takes care of log management automatically. It’s pretty smart about how it works: it first checks that the target directory exists (because you don’t want errors if paths change), then handles rotated .out and .log files (names such as AdminServer.log00001; the patterns do not match the active AdminServer.log itself). It compresses rotated logs older than 90 days and removes compressed logs that are over a year old. The beauty of this approach is that you can set it to run automatically every night at 2 AM using crontab, so you don’t have to think about it.

#!/bin/bash
# Oracle ODI Storage Management Automation Script
# This script automates log compression and deletion.
TARGET_DIR="/usr01/oracle/Middleware/logs"
# Check directory existence
if [ ! -d "$TARGET_DIR" ]; then
    echo "Error: Directory $TARGET_DIR does not exist" >&2
    exit 1
fi
cd "$TARGET_DIR" || exit 1
echo "Working in directory: $TARGET_DIR"
# Compress rotated logs older than 90 days (skip files that are already gzipped)
find "$TARGET_DIR" -name "*.out[0-9]*" ! -name "*.gz" -type f -mtime +90 -exec gzip {} \;
find "$TARGET_DIR" -name "*.log[0-9]*" ! -name "*.gz" -type f -mtime +90 -exec gzip {} \;
# Delete compressed logs older than 1 year
find "$TARGET_DIR" -name "*.out[0-9]*.gz" -type f -mtime +365 -delete
find "$TARGET_DIR" -name "*.log[0-9]*.gz" -type f -mtime +365 -delete
echo "Cleanup completed in $TARGET_DIR"

This script offers several key features:

- Directory existence validation

- Both .out and .log file handling

- Safe directory navigation

- Clear execution status messages
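Before scheduling the cleanup, a dry run shows exactly which files it would touch. A minimal sketch: it uses the same name patterns and ages as the cleanup script, skips files that are already compressed, and replaces `gzip`/`-delete` with `-print`, so nothing is modified. `preview_cleanup` is a hypothetical name.

```bash
#!/bin/bash
# Minimal sketch: list what the log cleanup would compress and delete, without changing anything.
preview_cleanup() {
    local dir="${1:-/usr01/oracle/Middleware/logs}"
    [ -d "$dir" ] || { echo "Error: Directory $dir does not exist" >&2; return 1; }
    echo "Would compress:"
    find "$dir" -type f \( -name "*.out[0-9]*" -o -name "*.log[0-9]*" \) \
        ! -name "*.gz" -mtime +90 -print
    echo "Would delete:"
    find "$dir" -type f \( -name "*.out[0-9]*.gz" -o -name "*.log[0-9]*.gz" \) \
        -mtime +365 -print
}
```

Reviewing this list once, especially on the first run in a new environment, is cheap insurance against a pattern that matches more than intended.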

To automate this script, add it to your crontab:

# Run at 2 AM every day
0 2 * * * /path/to/odi_storage_cleanup.sh
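If you install the entry from a script, you can avoid adding it twice. This is a sketch under assumptions: `/path/to/odi_storage_cleanup.sh` is the placeholder from the entry above, and `merge_cron_line`/`install_cron_line` are hypothetical names. It reads the current crontab with `crontab -l`, appends the line only if an identical line is not already present, and writes the result back with `crontab -`.

```bash
#!/bin/bash
# Minimal sketch: add the cleanup entry to the current user's crontab only once.
CRON_LINE='0 2 * * * /path/to/odi_storage_cleanup.sh'

merge_cron_line() {
    # Reads an existing crontab on stdin; prints it with $1 appended if missing
    local line="$1" current
    current="$(cat)"
    if [ -n "$current" ]; then printf '%s\n' "$current"; fi
    if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
}

install_cron_line() {
    # crontab -l fails when no crontab exists yet, so treat that as empty input
    { crontab -l 2>/dev/null || true; } | merge_cron_line "$CRON_LINE" | crontab -
}
```

Calling `install_cron_line` repeatedly leaves exactly one copy of the entry.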

Phase 3: Establish Storage Policies

Finally, Phase 3 is all about establishing clear storage policies. Think of this as setting up rules for your system’s housekeeping. We break it down into three main areas:

- Backup Management

We keep things lean by only retaining 30 days of backups. Anything older than 7 days gets compressed, and we make sure to store these backups on a separate volume for safety.

# Delete backup files older than 30 days

find /*/backup -name "*.bak" -type f -mtime +30 -delete

find /*/backup -name "*.backup" -type f -mtime +30 -delete
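The commands above cover deletion only. A sketch of the full policy follows, with one subtlety: `gzip` renames `file.bak` to `file.bak.gz`, so the deletion step must also match the compressed names, or compressed backups would never be removed. Since `gzip` keeps the original modification time, the 30-day clock still applies after compression. The default directory `/usr01/backup` and the function names are hypothetical, and `on_separate_volume` assumes GNU `stat`.

```bash
#!/bin/bash
# Minimal sketch: delete backups after 30 days, compress the rest after 7 days.
apply_backup_policy() {
    local dir="${1:-/usr01/backup}"
    [ -d "$dir" ] || { echo "Error: $dir does not exist" >&2; return 1; }
    # Delete first, so files about to be removed are not compressed needlessly
    find "$dir" -type f \( -name "*.bak" -o -name "*.backup" \
        -o -name "*.bak.gz" -o -name "*.backup.gz" \) -mtime +30 -delete
    # Compress remaining uncompressed backups older than 7 days
    find "$dir" -type f \( -name "*.bak" -o -name "*.backup" \) -mtime +7 -exec gzip {} +
}

on_separate_volume() {
    # Succeeds when the two paths live on different filesystems (%d = device number)
    [ "$(stat -c %d "$1")" != "$(stat -c %d "$2")" ]
}
```

A check such as `on_separate_volume /usr01/backup /usr01/oracle/Middleware || echo "Backups share a volume with the data"` helps enforce the separate-volume rule.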

- Log Rotation

  - Compress logs older than 30 days
  - Delete logs older than 90 days
  - Monitor log growth weekly

# Compress logs older than 30 days

find /*/logs -name "*.log" -mtime +30 -exec gzip {} \;

# Delete compressed logs older than 90 days

find /*/logs -name "*.gz" -mtime +90 -delete
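For the weekly growth check, one approach is to store each measurement and compare it with the previous one. A minimal sketch, assuming you run it weekly (for example from cron) with one state file per directory; the state file location and the name `log_growth` are hypothetical choices.

```bash
#!/bin/bash
# Minimal sketch: record a directory's size and report growth since the last run.
log_growth() {
    local dir="$1" state="${2:-$HOME/.odi_log_growth}" now prev
    # du -sk reports the total size in KiB
    now="$(du -sk "$dir" 2>/dev/null | cut -f1)"
    [ -n "$now" ] || { echo "Error: cannot measure $dir" >&2; return 1; }
    if [ -f "$state" ]; then
        prev="$(cat "$state")"
        echo "$dir: ${now} KiB (change since last run: $((now - prev)) KiB)"
    else
        echo "$dir: ${now} KiB (first measurement)"
    fi
    # Save the current size for the next comparison
    echo "$now" > "$state"
}
```

A steadily growing weekly delta, even while usage is below 80%, is an early sign that rotation or compression is not keeping up.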

- Temporary File Cleanup

  - Clear staging files after successful loads
  - Remove temp files older than 7 days
  - Set size limits for temp directories

# Remove temporary files older than 7 days

find /stage -type f -mtime +7 -delete
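Size limits for temp directories can start as a simple report. This sketch only measures and reports; it deletes nothing. The limit is an assumption you would tune per directory, and `check_dir_limit` is a hypothetical name. It returns 1 when the directory exceeds the limit, so it can trigger an alert or an extra cleanup pass.

```bash
#!/bin/bash
# Minimal sketch: report whether a temp/staging directory exceeds a soft size limit (in MB).
check_dir_limit() {
    local dir="$1" limit_mb="$2" used_mb
    # du -sm reports the total size in MB, rounded up
    used_mb="$(du -sm "$dir" 2>/dev/null | cut -f1)"
    [ -n "$used_mb" ] || { echo "Error: cannot measure $dir" >&2; return 2; }
    if [ "$used_mb" -gt "$limit_mb" ]; then
        echo "OVER LIMIT: $dir uses ${used_mb} MB (limit ${limit_mb} MB)"
        return 1
    fi
    echo "OK: $dir uses ${used_mb} MB (limit ${limit_mb} MB)"
}
```

For example, `check_dir_limit /stage 5000` flags the staging area once it grows past roughly 5 GB.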

Each of these policies comes with its own cleanup commands, but the key is understanding why we do each step. It’s not just about freeing up space – it’s about maintaining a healthy, efficient system that can handle your workload without running into storage issues.

The best part about this approach is that once you set it up, most of it runs automatically. You just need to monitor and adjust based on your specific needs. Remember, these aren’t just random commands – they’re part of a well-thought-out strategy to keep your Oracle ODI environment running smoothly.

Pro Tips for Long-term Success

Here are some battle-tested recommendations:

Common Mistakes to Avoid

Don’t fall into these common traps:

- Ignoring Temporary Files: These can accumulate quickly during data loads.

- Keeping Too Many Backups: Only retain what your recovery policy requires.

- Setting and Forgetting: Regular monitoring prevents emergency situations.

When to Seek Help

Consider getting expert help if you:

- Consistently run above 90% storage

- Experience frequent space issues

- Need to scale your environment

Conclusion

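Proper storage management in Oracle ODI requires both immediate action and long-term planning. By following this guide, you can find what is consuming space, automate routine log cleanup, and set retention policies that keep usage below the 80% warning level.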

Have questions about managing your ODI storage? Drop them in the comments below!