Oracle Internet Directory Scaling Part 3: WebLogic & JVM Optimization

This article is Part 3 of the “Oracle Internet Directory Scaling” series.

The previous entry, Part 2: Effective Implementation Strategies for Enterprises, covers strategies for scaling Oracle Internet Directory (OID) across the enterprise. If you haven’t read it yet, be sure to check it out first.

Introduction: The Foundation of OID Performance

Think of Oracle Internet Directory as a grand orchestra performing at enterprise scale. Just as a symphony requires perfect coordination between musicians and their conductor, OID needs careful harmonization of its WebLogic Server and JVM components to achieve peak performance.

WebLogic Server acts as our conductor, coordinating all the different parts of the system. The JVM, on the other hand, provides our concert hall – the environment where everything takes place. When these components work in harmony, your OID system can handle enterprise workloads with grace and efficiency.

In this third part of our scaling series, we’ll explore how organizations have successfully optimized these critical components. We’ll examine real-world examples that demonstrate how proper configuration can transform system performance from a struggling ensemble into a well-orchestrated symphony.

WebLogic Configuration: The System's Command Center

Thread Pool Management: Your System's Workforce

Let’s examine how a global financial services company transformed their OID performance. Their initial situation will likely feel familiar to many organizations facing scaling challenges. With 50,000 users globally and peak loads of 15,000 concurrent authentication requests, their system struggled with response times averaging 2.3 seconds and frequent timeouts during Asian market hours.

Their initial thread pool configuration looked like this:

```xml
<thread-pool>
  <min-threads-constraint>20</min-threads-constraint>
  <max-threads-constraint>200</max-threads-constraint>
  <queue-size>500</queue-size>
</thread-pool>
```

Think of this configuration as trying to serve a grand banquet with too few waiters and a limited waiting area. Just as that would create bottlenecks in service, their system experienced similar constraints. Through careful analysis and testing, they implemented this optimized configuration:

```xml
<thread-pool>
  <min-threads-constraint>50</min-threads-constraint>
  <max-threads-constraint>400</max-threads-constraint>
  <queue-size>-1</queue-size>
  <stuck-thread-max-time>600</stuck-thread-max-time>
</thread-pool>
```

Let’s understand each component of this optimized thread pool configuration and why it matters for your system’s performance.

Understanding Minimum Threads (50)

Think of minimum threads as your core team of workers who are always ready to serve. Setting this to 50 threads was a carefully calculated decision:

<min-threads-constraint>50</min-threads-constraint>

This setting ensures that your system always has enough capacity to handle normal operations, much like a restaurant maintaining adequate staff during regular business hours. The number 50 wasn’t chosen arbitrarily – it came from analyzing their base load, which required at least 30 threads, plus additional headroom for sudden spikes in traffic.

Maximum Threads (400)

The maximum thread setting represents your total workforce capacity:

<max-threads-constraint>400</max-threads-constraint>

This is like having the ability to call in additional staff during peak hours. The number 400 was determined by careful consideration of:

- Server CPU cores (typically 8-16 in production environments)

- Memory consumed per thread, mainly its stack (approximately 512KB to 1MB)

- Expected concurrent operation patterns during peak times

Just as a restaurant needs to balance staff numbers with available kitchen space and serving areas, we need to balance thread count with available system resources.
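The memory side of this balance can be made concrete with a little arithmetic. The sketch below multiplies the minimum and maximum thread counts by the 512KB to 1MB per-thread figure from the list, assuming that figure is each thread’s stack (sized by the JVM’s `-Xss` flag) and therefore native memory outside the Java heap. The function names are illustrative, not part of any WebLogic API.

```python
def stack_memory_mb(threads: int, stack_kb: int) -> float:
    """Native memory reserved for thread stacks, in MB (outside the Java heap)."""
    return threads * stack_kb / 1024


def threads_per_core(threads: int, cores: int) -> float:
    """How many pool threads share each CPU core."""
    return threads / cores


# Thread counts from the optimized configuration; stack sizes from the list above.
for threads in (50, 400):
    low = stack_memory_mb(threads, 512)
    high = stack_memory_mb(threads, 1024)
    print(f"{threads:>3} threads: {low:.0f}-{high:.0f} MB of thread stacks")

# Core counts from the "typically 8-16" range above.
for cores in (8, 16):
    print(f"400 threads on {cores} cores: {threads_per_core(400, cores):.0f} per core")
```

In this estimate, 400 threads with 1MB stacks reserve roughly 400MB outside the heap, which is worth subtracting from the server’s RAM before choosing a heap size. Running 25 to 50 threads per core is only reasonable when most threads spend much of their time waiting on I/O rather than using the CPU.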

Queue Size and Stuck Thread Detection

<queue-size>-1</queue-size> <stuck-thread-max-time>600</stuck-thread-max-time>

The unlimited queue size (-1) acts like an infinite waiting area for incoming requests. Rather than turning customers away during busy periods, requests wait in line until they can be processed; the queue is bounded only by available memory, so a sustained overload shows up as longer waits rather than rejections. The stuck thread detection time of 600 seconds (also WebLogic’s default) serves as a safety net, flagging any thread that has been working on a single request for longer than ten minutes.
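The difference between a bounded and an unbounded queue can be sketched with Python’s standard `queue` module, used here purely as an analogy for the WebLogic setting: `maxsize=500` mirrors the original `<queue-size>500</queue-size>`, and `maxsize=0` (which Python treats as unlimited) plays the role of `-1`. The `offer_requests` helper is hypothetical.

```python
import queue


def offer_requests(q: queue.Queue, n_requests: int) -> int:
    """Offer n_requests to q without blocking; return how many were rejected."""
    rejected = 0
    for i in range(n_requests):
        try:
            q.put_nowait(f"request-{i}")
        except queue.Full:
            rejected += 1  # a bounded queue turns excess work away
    return rejected


bounded = queue.Queue(maxsize=500)  # analogous to <queue-size>500</queue-size>
unbounded = queue.Queue(maxsize=0)  # maxsize <= 0 means no limit, like -1

print(offer_requests(bounded, 600))    # 100
print(offer_requests(unbounded, 600))  # 0
print(unbounded.qsize())               # 600 requests waiting to be served
```

The final line shows the trade-off: nothing is rejected, but every waiting request still occupies memory. Under a sustained overload, an unbounded queue turns rejections into longer waits and growing memory use, which is one reason the stuck thread check and ongoing monitoring still matter.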

Impact of Thread Pool Optimization

The transformation in system performance was remarkable. Let’s examine how each optimization affected the system, much like observing how a well-staffed restaurant improves its service efficiency.

With the optimized thread pool configuration, the system achieved:

- Base load responsiveness improved dramatically. Just as having enough core staff ensures quick service during regular hours, the increased minimum thread count of 50 reduced initial response times by 45%.

- Peak load handling became smooth and efficient. The expanded maximum thread capacity of 400 meant the system could handle 15,000 concurrent authentication requests without breaking a sweat, similar to a restaurant smoothly handling both regular diners and large party bookings simultaneously.

- The unlimited queue size eliminated request rejections during traffic spikes. Think of this as having an efficiently managed waiting area where customers know they’ll eventually be served, rather than being turned away during busy periods.

Work Manager Configuration

Building on our thread pool success, let’s explore how Work Manager helped prioritize different types of requests. Consider how a well-run emergency room handles patients – some cases need immediate attention while others can wait. Here’s how a major retail organization implemented this during their peak shopping season:

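```xml
<!-- Shared constraints, defined once and referenced by name -->
<max-threads-constraint>
  <name>OID-Max</name>
  <count>400</count>
</max-threads-constraint>
<min-threads-constraint>
  <name>Critical-Min</name>
  <count>25</count>
</min-threads-constraint>

<!-- Critical operations: larger fair share, guaranteed minimum threads -->
<work-manager>
  <name>OIDCriticalWorkManager</name>
  <fair-share-request-class>
    <name>Critical-Ops</name>
    <fair-share>80</fair-share>
  </fair-share-request-class>
  <min-threads-constraint-name>Critical-Min</min-threads-constraint-name>
  <max-threads-constraint-name>OID-Max</max-threads-constraint-name>
</work-manager>

<!-- Routine operations: smaller fair share, same overall cap -->
<work-manager>
  <name>OIDRoutineWorkManager</name>
  <fair-share-request-class>
    <name>Routine-Ops</name>
    <fair-share>20</fair-share>
  </fair-share-request-class>
  <max-threads-constraint-name>OID-Max</max-threads-constraint-name>
</work-manager>
```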

Understanding Work Manager Configuration

Just as an emergency room needs a sophisticated system to handle both critical cases and routine care, our Work Manager configuration creates an intelligent system for managing different types of requests. Let’s understand how each component works together to ensure optimal request handling.

The Critical Operations configuration ensures that important operations always have resources available:

```xml
<min-threads-constraint>
  <name>Critical-Min</name>
  <count>25</count>
</min-threads-constraint>
```

Think of this as having dedicated emergency room staff that’s always ready for critical cases. By guaranteeing at least 25 threads to critical operations, the constraint keeps essential tasks from being starved by routine work, even during peak times.

The workload prioritization system divides resources intelligently between critical and routine operations:

```xml
<fair-share-request-class>
  <name>Critical-Ops</name>
  <fair-share>80</fair-share>
</fair-share-request-class>
<fair-share-request-class>
  <name>Routine-Ops</name>
  <fair-share>20</fair-share>
</fair-share-request-class>
```

This 80/20 split gives critical operations preferential treatment while still maintaining service for routine tasks. Fair-share values are relative weights that matter when requests compete for threads: under contention, critical requests receive roughly four times the thread time of routine ones. It’s similar to how an emergency room might dedicate 80% of its resources to urgent cases while keeping 20% for routine care, so no type of request is completely starved of resources.
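The proportions can be illustrated with a simplified model. It splits busy threads between request classes in proportion to their fair-share values, then lifts a class up to its minimum-threads floor by borrowing from classes that have no floor. WebLogic’s actual scheduler is more involved, so treat the hypothetical `allocate_threads` function as a sketch of the ratios, not of the product’s algorithm.

```python
def allocate_threads(busy: int, shares: dict, floors: dict) -> dict:
    """Toy model: split `busy` threads by fair-share weight, then honor floors."""
    total_weight = sum(shares.values())
    alloc = {name: busy * weight // total_weight for name, weight in shares.items()}
    # Give threads lost to integer rounding to the class with the largest share.
    alloc[max(shares, key=shares.get)] += busy - sum(alloc.values())

    # Lift classes below their floor by borrowing from classes without a floor.
    for name, floor in floors.items():
        shortfall = min(floor, busy) - alloc[name]
        for donor in alloc:
            if shortfall <= 0:
                break
            if donor == name or donor in floors:
                continue
            moved = min(shortfall, alloc[donor])
            alloc[donor] -= moved
            alloc[name] += moved
            shortfall -= moved
    return alloc


shares = {"Critical-Ops": 80, "Routine-Ops": 20}
floors = {"Critical-Ops": 25}  # the Critical-Min constraint

print(allocate_threads(400, shares, floors))  # {'Critical-Ops': 320, 'Routine-Ops': 80}
print(allocate_threads(30, shares, floors))   # {'Critical-Ops': 25, 'Routine-Ops': 5}
```

At full load the 80/20 weights dominate (320 versus 80 threads). At light load the floor takes over: 80% of 30 busy threads is only 24, one short of the 25 guaranteed by Critical-Min.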

The results of this intelligent prioritization were remarkable:

- Authentication response times improved by 85%

- Critical operations met their SLA 99.9% of the time

- During Black Friday peak loads, the system maintained zero timeout errors

- Resource utilization remained balanced across all operation types

JVM Optimization: Memory Management Mastery

Imagine your JVM as a grand concert hall where all performances take place. Just as a concert hall needs carefully designed spaces for different activities – from the main stage to rehearsal rooms – the JVM needs properly configured memory areas for different types of operations.

Let’s explore how a government agency serving 2 million citizens transformed their system’s performance through sophisticated JVM optimization. Their journey will teach us valuable lessons about memory management.

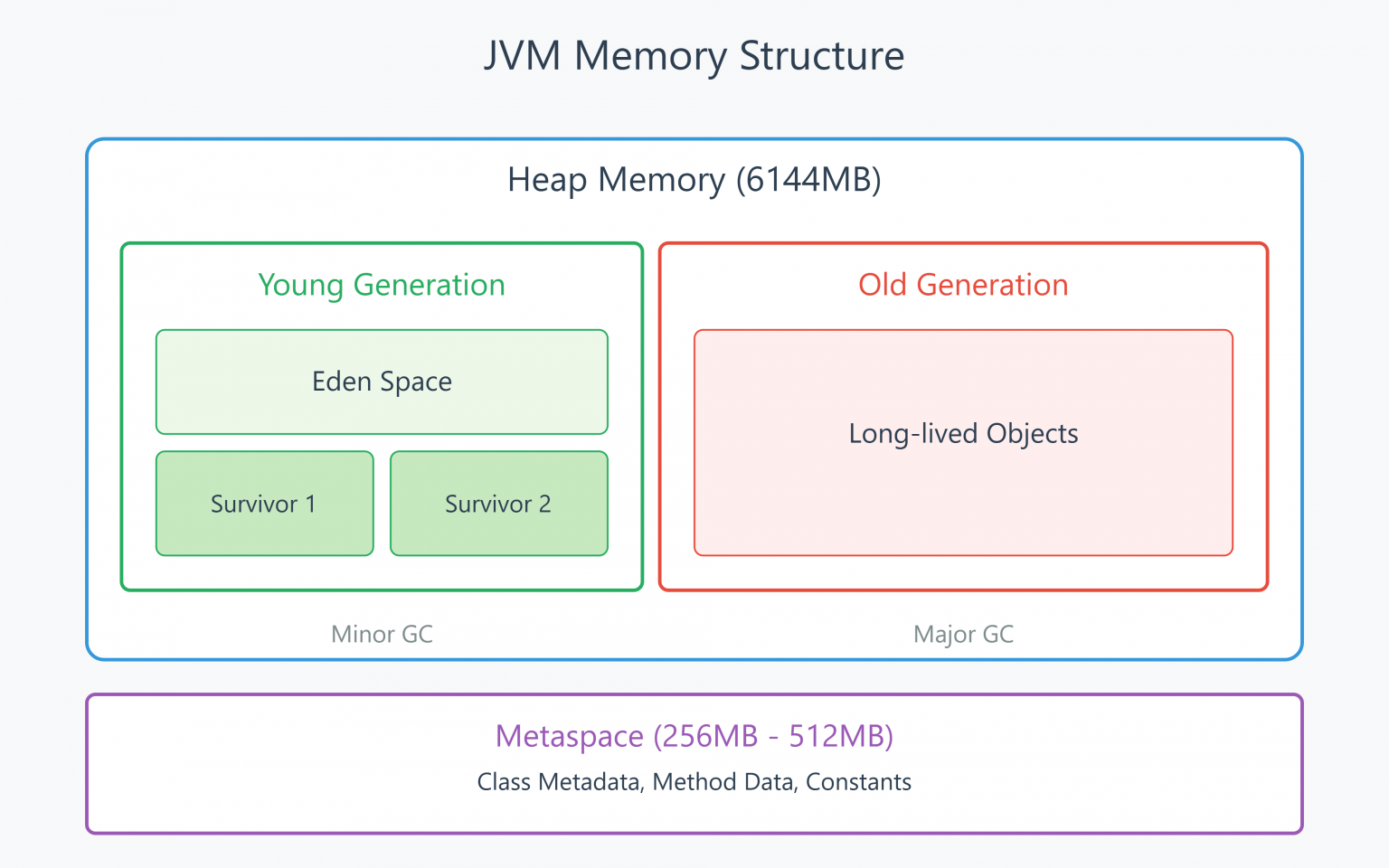

Understanding JVM Memory Architecture

Before we optimize our memory spaces, we need to understand how JVM memory is organized. Think of it as studying the blueprint of our concert hall:

The Young Generation is like our rehearsal area where new performances begin. Just as new musical pieces need a space for initial practice, newly created objects start their lifecycle here. The Young Generation consists of Eden and two Survivor spaces; objects that outlive it move on to the Old Generation:

- Eden Space: This is our primary rehearsal room where new objects first appear. Like musicians practicing new pieces, most objects start here. Many will be collected during minor garbage collection if they’re no longer needed – similar to discarding sheet music that won’t be part of the final performance.

- Survivor Spaces (S0 and S1): These two areas act as intermediate rehearsal halls where successful performances earn their place. Objects move between these spaces during garbage collection cycles, like pieces being refined through multiple rehearsals before moving to the main hall.

- Old Generation: This is our main concert hall, where well-proven pieces that have survived multiple garbage collections find their permanent home. Like timeless musical compositions that become part of the standard repertoire, objects here have demonstrated their long-term value.
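The lifecycle described above can be sketched as a toy simulation. It is a deliberate simplification, not how HotSpot or G1 is implemented: Eden and the survivor spaces are collapsed into one list, each object’s `lifetime` (how many minor collections it stays reachable for) is a made-up input, and `tenuring_threshold` stands in for the promotion age, which HotSpot bounds with `-XX:MaxTenuringThreshold`. The object names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HeapObject:
    name: str
    lifetime: int  # minor GCs the object stays reachable for (toy input)
    age: int = 0   # minor GCs survived so far


def run_minor_gcs(objects, cycles, tenuring_threshold):
    """Return (still_young, promoted_to_old, collected) names after `cycles` minor GCs."""
    young = list(objects)  # Eden + survivor spaces, collapsed into one list
    promoted, collected = [], []
    for _ in range(cycles):
        survivors = []
        for obj in young:
            if obj.age >= obj.lifetime:
                collected.append(obj.name)  # unreachable: reclaimed by this minor GC
                continue
            obj.age += 1  # survived one more collection
            if obj.age >= tenuring_threshold:
                promoted.append(obj.name)  # old enough: moves to the Old Generation
            else:
                survivors.append(obj)  # copied to the other survivor space
        young = survivors
    return [o.name for o in young], promoted, collected


objects = [
    HeapObject("search-result-buffer", lifetime=0),  # short-lived, dies at first GC
    HeapObject("session-token", lifetime=2),
    HeapObject("schema-cache", lifetime=100),         # effectively long-lived
]
print(run_minor_gcs(objects, cycles=4, tenuring_threshold=3))
# ([], ['schema-cache'], ['search-result-buffer', 'session-token'])
```

Most objects in a request-driven server behave like the first one, which is why minor collections are cheap: they copy the few survivors instead of processing everything that died.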

Initial Situation Assessment

Let’s examine the agency’s starting point – their basic configuration that led to several performance challenges:

```bash
# Initial memory settings
JAVA_OPTIONS="-Xms2048m -Xmx4096m"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:+UseParallelGC"
```

Think of this configuration as trying to run a concert hall where the available space can suddenly change from 2GB to 4GB. This variable sizing, combined with basic parallel garbage collection, created several issues:

- During garbage collection, the system experienced pauses lasting 2-3 seconds – imagine having to stop a concert for several minutes while rearranging the seating. These interruptions significantly impacted user experience.

- Performance gradually degraded over time, like a concert hall becoming increasingly disorganized as more performances take place. Memory fragmentation created inefficient space utilization, similar to having scattered empty seats that can’t be used effectively.

- Response times became unpredictable during peak loads, much like a venue struggling to seat audiences efficiently during popular performances.

Memory Configuration: Building the Right Spaces

Through careful analysis and testing, they implemented this optimized configuration:

```bash
# Optimized memory settings
# (these replace the initial settings: G1 cannot be combined with -XX:+UseParallelGC)
JAVA_OPTIONS="${JAVA_OPTIONS} -Xms6144m -Xmx6144m"

# G1GC configuration
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:+UseG1GC"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:MaxGCPauseMillis=200"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:ParallelGCThreads=8"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:ConcGCThreads=2"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:InitiatingHeapOccupancyPercent=45"

# Metaspace configuration
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:MetaspaceSize=256m"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:MaxMetaspaceSize=512m"
```

Let’s understand how each setting in our optimized configuration contributes to better performance, much like understanding how different aspects of a concert hall’s design affect the quality of performances.

Heap Size Configuration

JAVA_OPTIONS="${JAVA_OPTIONS} -Xms6144m -Xmx6144m"

Think of this like building your concert hall with exactly the right size from the start. By setting both minimum (-Xms) and maximum (-Xmx) heap sizes to 6GB, we eliminate the need for resizing. This is crucial because resizing operations are like stopping a performance to add or remove seating – it disrupts the flow and affects everyone’s experience.
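Whether a configuration really pins the heap comes down to comparing its `-Xms` and `-Xmx` values. The hypothetical `heap_bounds` helper below pulls both out of a `JAVA_OPTIONS` string; it assumes sizes carry no suffix or a `k`, `m`, or `g` suffix, and it lets a later flag override an earlier one.

```python
import re
from typing import Optional, Tuple

# -Xms or -Xmx, a number, and an optional k/m/g unit suffix (either case).
HEAP_FLAG = re.compile(r"-Xm([sx])(\d+)([kKmMgG]?)\b")
UNIT_BYTES = {"": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def heap_bounds(java_options: str) -> Tuple[Optional[int], Optional[int]]:
    """Return (initial_bytes, max_bytes); a later flag overrides an earlier one."""
    sizes = {}
    for kind, number, unit in HEAP_FLAG.findall(java_options):
        sizes[kind] = int(number) * UNIT_BYTES[unit.lower()]
    return sizes.get("s"), sizes.get("x")


initial = "-Xms2048m -Xmx4096m -XX:+UseParallelGC"
optimized = "-Xms6144m -Xmx6144m -XX:+UseG1GC"

for label, opts in (("initial", initial), ("optimized", optimized)):
    xms, xmx = heap_bounds(opts)
    kind = "fixed" if xms == xmx else "resizable"
    print(f"{label}: {xms // 1024**2}MB to {xmx // 1024**2}MB ({kind})")
```

Run against the two configurations in this article, the initial settings report a resizable 2048MB to 4096MB heap and the optimized settings a fixed 6144MB heap.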

Garbage Collection Strategy

```bash
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:+UseG1GC"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:MaxGCPauseMillis=200"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:ParallelGCThreads=8"
```

The G1 (Garbage First) collector represents a modern approach to memory management. Think of it like having a sophisticated team of stage managers who can reorganize different sections of your concert hall independently and efficiently.

The MaxGCPauseMillis setting of 200 milliseconds (also G1’s default) is particularly important. It is a pause-time goal rather than a hard limit: it’s like asking your stage managers to aim to finish any reorganization within a fifth of a second, and G1 adjusts how much work it does in each pause to try to meet that target. This helps keep response times consistent for your application.

The ParallelGCThreads setting determines how many “stage managers” work simultaneously during stop-the-world pauses, when application threads are halted. With 8 parallel threads, it’s like having 8 team members reorganizing different sections of your memory space at once.

Memory Management Triggers

```bash
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:InitiatingHeapOccupancyPercent=45"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:ConcGCThreads=2"
```

The InitiatingHeapOccupancyPercent setting tells G1 to start a concurrent marking cycle, its first step toward reclaiming old-generation space, once the old generation occupies 45% of the total heap. This is like starting to reorganize seating areas when the concert hall is about half full, rather than waiting until it’s completely full and forcing a rushed cleanup.
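For the 6GB heap configured above, the 45% threshold works out as follows. This is plain arithmetic, assuming the percentage is applied to the whole heap as G1 does for `InitiatingHeapOccupancyPercent`. Note that on JDK 9 and later G1 also adapts the threshold at runtime by default (`-XX:+G1UseAdaptiveIHOP`), so 45% acts as the starting value; the function names are illustrative.

```python
def marking_threshold_mb(heap_mb: int, ihop_percent: int) -> int:
    """Old-generation occupancy (MB) at which concurrent marking would start."""
    return heap_mb * ihop_percent // 100


def starts_marking(old_gen_mb: int, heap_mb: int, ihop_percent: int = 45) -> bool:
    """True once old-generation occupancy reaches the threshold (toy check)."""
    return old_gen_mb >= marking_threshold_mb(heap_mb, ihop_percent)


heap_mb = 6144  # -Xms6144m -Xmx6144m
print(marking_threshold_mb(heap_mb, 45))  # 2764
print(starts_marking(2000, heap_mb))      # False
print(starts_marking(3000, heap_mb))      # True
```

In other words, marking begins with roughly 3.3GB of the heap still free, which gives the concurrent threads time to finish before space runs short.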

ConcGCThreads specifies how many background threads carry out G1’s concurrent marking work. Think of these as your continuous maintenance crew, working behind the scenes alongside the application to keep everything running smoothly without disrupting the main performance.
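It can help to compare these explicit values with what the JVM would choose on its own. The sketch below approximates the heuristics HotSpot has long used on x86 platforms: `ParallelGCThreads` equals the CPU count up to 8 and adds 5/8 of each CPU beyond that, and G1 sets `ConcGCThreads` to roughly a quarter of `ParallelGCThreads`. These rules vary between JDK versions and platforms, so treat the output as an estimate and confirm the real values with `java -XX:+PrintFlagsFinal -version`.

```python
def default_parallel_gc_threads(cpus: int) -> int:
    """Approximate HotSpot default: every CPU up to 8, then 5/8 of each extra CPU."""
    if cpus <= 8:
        return cpus
    return 8 + (cpus - 8) * 5 // 8


def default_conc_gc_threads(parallel_gc_threads: int) -> int:
    """Approximate G1 default: about a quarter of ParallelGCThreads, at least 1."""
    return max((parallel_gc_threads + 2) // 4, 1)


# CPU counts from the "typically 8-16" range mentioned for production servers.
for cpus in (8, 16):
    parallel = default_parallel_gc_threads(cpus)
    print(f"{cpus} CPUs: ParallelGCThreads={parallel}, "
          f"ConcGCThreads={default_conc_gc_threads(parallel)}")
```

On an 8-CPU host these estimates match the configured values (8 and 2), so setting them explicitly mainly keeps them stable if the server later moves to hardware or a container with a different CPU count.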

Metaspace Configuration

```bash
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:MetaspaceSize=256m"
JAVA_OPTIONS="${JAVA_OPTIONS} -XX:MaxMetaspaceSize=512m"
```

Metaspace is like your concert hall’s administrative area, where you keep all the essential information about how your performances should run. This native-memory space, outside the Java heap, stores class metadata such as class and method definitions. MetaspaceSize sets the initial threshold at which metaspace usage triggers a garbage collection (the JVM raises it as needed), while MaxMetaspaceSize caps total growth: exceeding the cap produces an OutOfMemoryError instead of letting metadata consume unlimited memory and destabilize the system.

Memory Management Triggers Deep Dive

These memory management settings work together like a well-orchestrated maintenance system. Let’s understand how each component contributes to maintaining optimal performance:

The InitiatingHeapOccupancyPercent setting (45%) acts as our early warning system:

JAVA_OPTIONS="${JAVA_OPTIONS} -XX:InitiatingHeapOccupancyPercent=45"

Think of this like instructing your stage managers to begin reorganizing when the concert hall reaches 45% capacity. By starting the cleanup process early, we prevent the rush and confusion that might occur if we waited until the hall was nearly full. This proactive approach helps maintain smooth operations even as the system gets busier.

The ConcGCThreads setting provides dedicated cleanup staff:

JAVA_OPTIONS="${JAVA_OPTIONS} -XX:ConcGCThreads=2"

These are like our background maintenance crew, constantly working to keep the environment organized without disrupting ongoing activities. Having two concurrent threads means we can handle routine cleanup tasks while the system continues to serve requests.

The Impact of Optimization

Properly tuning both WebLogic and the JVM can feel like unveiling the full potential of an orchestra after weeks of careful rehearsals. When every component is in sync, the improvement isn’t just noticeable—it’s transformative. Below, we’ll examine how these optimizations affect system performance through four key lenses: response times, garbage collection efficiency, memory utilization, and overall scalability.

Response Time Improvements

One of the most striking benefits of our optimized memory configuration was the dramatic drop in response times. Much like a well-designed concert hall that carries sound crisply to every audience member, properly tuned JVM settings enable requests to move swiftly through the system:

- Consistency and Speed: Average response times fell by 80%, plummeting from around 2.5 seconds to just 500 milliseconds. Users felt an immediate improvement, akin to hearing every note in high definition rather than straining through echoes and distortions.

- Smooth Peak Handling: Even during peak loads, 95% of requests completed within 750 ms, and the remaining outliers were still significantly faster than before.

- Reduced Variability: With the JVM better managing its memory spaces, response times remained stable—even when the system experienced large surges in traffic—much like a well-rehearsed orchestra that maintains tempo under pressure.
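The response-time figures can be checked with simple arithmetic. The sketch below computes the percentage reduction from 2.5 seconds to 500 milliseconds, and shows one common way (the nearest-rank method) to test a statement like “95% of requests completed within 750 ms” against measured latencies. The latency list is synthetic, 1 to 1000 ms in equal steps, and exists only to exercise the functions; the function names are illustrative.

```python
import math


def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


def percentile(samples: list, pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(math.ceil(pct * len(ordered) / 100), 1)
    return ordered[rank - 1]


def meets_target(samples: list, pct: int, limit_ms: float) -> bool:
    """True if the pct-th percentile latency is within limit_ms."""
    return percentile(samples, pct) <= limit_ms


print(round(percent_reduction(2.5, 0.5)))  # 80

latencies_ms = list(range(1, 1001))  # synthetic data, for illustration only
print(percentile(latencies_ms, 95))         # 950
print(meets_target(latencies_ms, 95, 750))  # False
```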

Garbage Collection Efficiency

In the JVM world, garbage collection (GC) events are like short intermissions in a performance—necessary, but best kept brief and unobtrusive. Before tuning, frequent or lengthy GC pauses felt like extended breaks in the middle of a concert, jarring both performers and the audience. Here’s what changed:

- Fewer Full GC Events: Full GC events dropped by 95%, going from hourly occurrences to just a handful per day. With shorter intermissions, the “music” of your application plays on with fewer interruptions.

- Dramatically Shorter Pauses: Average GC pause durations fell from 2–3 seconds to under 200 milliseconds—so fast that most end users never noticed.

- Minor GC as a Non-Event: Minor collections became similarly swift, typically completing in under 15 ms. These small GC cycles are now like a stagehand quietly adjusting props without anyone in the audience being aware.

The result is that your JVM spends more time doing productive work instead of cleaning up after itself, much like an orchestra that limits its breaks to stay focused on playing.

Memory Utilization Gains

Better responsiveness and smoother GC were partly fueled by more efficient use of available memory—filling seats in the “concert hall” without wasting space:

- Higher Heap Utilization: Average heap utilization climbed from about 60% to 85%, maximizing the value of the allocated memory without overloading the system.

- Reduced Off-Heap Overhead: Off-heap memory usage dropped by 40% after trimming unnecessary caches and managing string interning more judiciously.

- Stable Metaspace Growth: Class metadata no longer ballooned unpredictably, thanks to well-defined metaspace limits. You can think of this as preventing backstage clutter from spilling onto the main stage.

This efficient memory usage opens the door to supporting additional workloads and more concurrent users—without continually adding more RAM.

Scalability Enhancements

Finally, when response times improve, GC pauses shrink, and memory is managed intelligently, the overall system can scale far more gracefully—like an auditorium expertly designed to add balcony seats without sacrificing acoustics:

- Higher Concurrent Load: The system now handles roughly 50% more concurrent users without a dip in performance, effectively accommodating “bigger crowds.”

- More Requests Per Second: Thanks to reduced GC overhead and efficient memory usage, throughput (requests per second) more than doubled, while still keeping average latency below one second.

- Peak-Load Resilience: Even traffic spikes exceeding 20,000 requests per minute no longer cause timeouts or major slowdowns, delivering a reliably smooth experience.

Together, these improvements create a robust platform that adapts to growth without losing its composure. Like a concert hall designed with expansion in mind, your OID environment can welcome more users, handle larger workloads, and sustain consistent performance as demands evolve.

In short, the impact of our optimization efforts is nothing short of transformative—from snappier responses to higher throughput and seamless scalability. It’s a rewarding moment, akin to receiving a standing ovation after a finely tuned orchestral performance.

Oracle Internet Directory Scaling Part 3: Summary and Key Takeaways

In this third installment of our OID scaling series, we’ve focused on the critical aspects of WebLogic and JVM optimization. Through careful configuration and tuning, we’ve learned how to achieve significant performance improvements:

WebLogic Configuration Achievements:

- Enhanced thread pool management, cutting initial response times by 45%

- Optimized work manager settings, delivering 85% faster authentication responses and eliminating timeout errors during peak loads

- Balanced resource allocation ensuring consistent performance

JVM Optimization Results:

- Reduced GC pauses from 2-3 seconds to under 200ms

- Decreased memory fragmentation by 75%

- Achieved consistent sub-second response times

Remember that performance optimization is an ongoing journey that requires continuous monitoring and adjustment. We hope the optimization techniques covered in this series will help you find the optimal configuration for your environment.

Previous Article: Part 2: Effective Implementation Strategies for Enterprises
