Oracle Internet Directory (OID) Scaling Part 2: Effective Implementation Strategies for Enterprises

This article is Part 2 of the “Oracle Internet Directory Scaling” series.

The previous entry, Part 1: Fundamentals & Core Architecture, covers the fundamentals of Oracle Internet Directory enterprise scaling. If you haven’t read it yet, be sure to check it out first.

Scaling Oracle Internet Directory (OID) efficiently is crucial for enterprise success. This guide focuses on implementing OID enterprise scaling strategies, including memory optimization, connection management, and best practices for single-server and multi-server architectures. Follow these proven techniques to achieve performance gains and meet business demands.

For more advanced or complex scenarios, including deep WebLogic/JVM tuning and advanced troubleshooting, see Advanced Optimization Techniques and Troubleshooting.


Memory Optimization: Key Techniques for Enterprise Performance

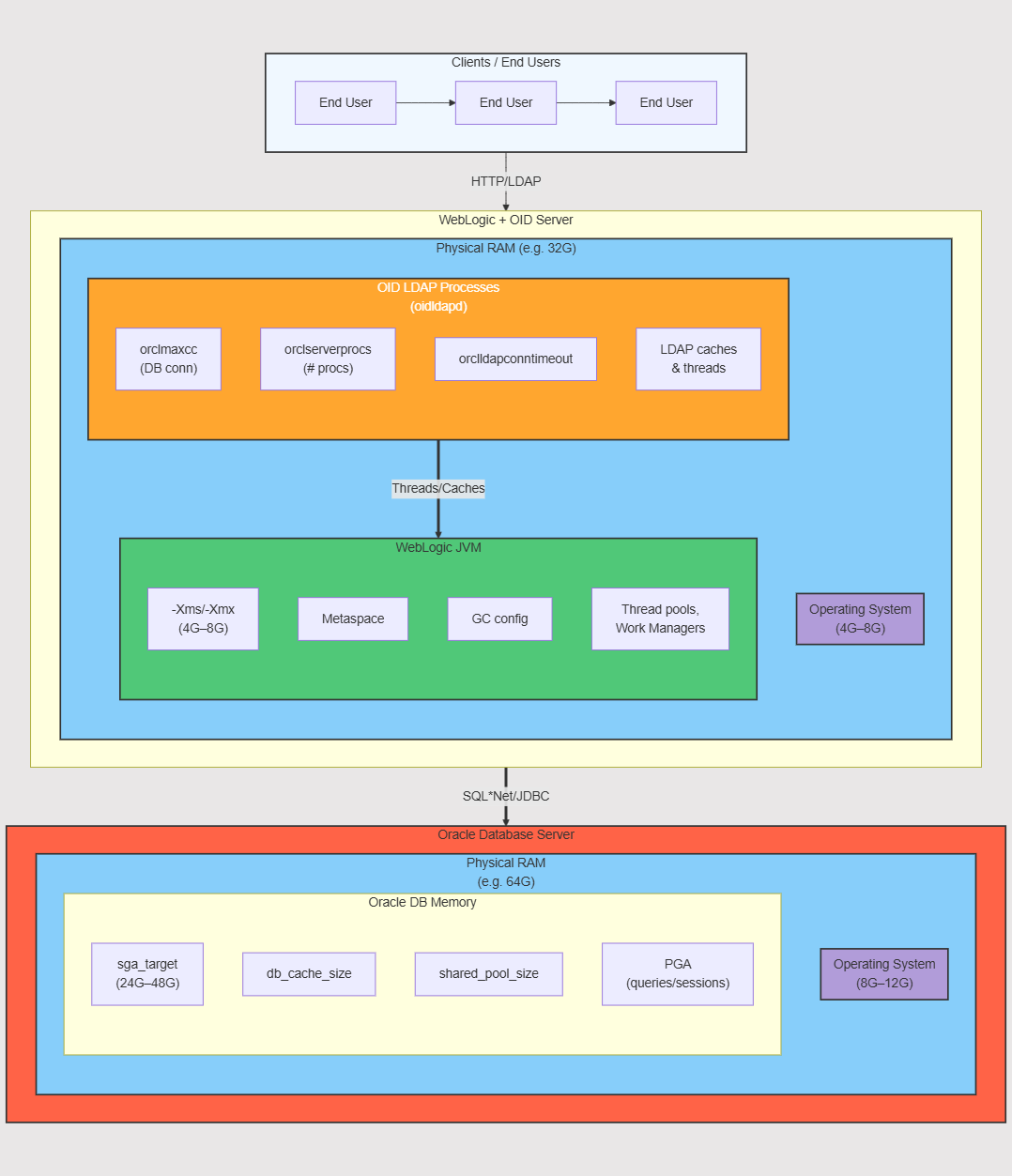

Memory configuration is like designing the infrastructure of a growing city—careful planning prevents congestion and ensures smooth operation. In OID environments, memory usage spans the database layer, the OID/LDAP processes, and the application layer (WebLogic JVM).

SGA Configuration and Tuning (Database Layer)

The System Global Area (SGA) is central to your Oracle Database performance. Below is an example of how you might tune the SGA on a single-server setup where the database and OID run on the same machine:

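-- These settings form the foundation of your memory architecture
-- sga_max_size is a static parameter: it can only be changed in the SPFILE
-- and takes effect after an instance restart, so all values are staged there
ALTER SYSTEM SET sga_max_size = 24G SCOPE=SPFILE;
ALTER SYSTEM SET sga_target = 24G SCOPE=SPFILE;
-- Buffer Cache Configuration
-- Allocate approximately 2/3 of SGA for buffer cache
-- (with sga_target set, this value acts as a minimum for the cache)
ALTER SYSTEM SET db_cache_size = 16G SCOPE=SPFILE;
-- Shared Pool Configuration
-- Start with 4GB, adjust based on workload
ALTER SYSTEM SET shared_pool_size = 4G SCOPE=SPFILE;
-- Restart the instance for the new SGA limits to take effect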

- 75% Rule of Thumb: Allocating roughly 75% of total RAM to the SGA (24GB of 32GB in this example) leaves about a quarter for the OS, the PGA, WebLogic, and any other processes.

- Balanced Distribution:
  - 16GB to buffer cache for commonly accessed data.
  - 4GB to shared pool for SQL statements, PL/SQL code, and metadata.
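The sizing rules above reduce to simple arithmetic. The Python sketch below is a hypothetical helper (not an Oracle tool) that applies them to a host's RAM: about 75% of RAM for the SGA, about 2/3 of the SGA for the buffer cache, and this article's 4GB shared pool starting point. It uses integer GB math so rounding cannot overshoot a share. Reading the remainder as "other pools" is an assumption based on how Automatic Shared Memory Management works: with `sga_target` set, `db_cache_size` and `shared_pool_size` act as minimums and Oracle distributes the rest.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SgaPlan:
    sga_target_gb: int
    db_cache_gb: int
    shared_pool_gb: int
    other_pools_gb: int  # left for large/java/streams pools under ASMM


def plan_sga(total_ram_gb: int, shared_pool_gb: int = 4) -> SgaPlan:
    """Hypothetical helper applying this section's rules of thumb.

    Assumes ~75% of RAM for the SGA, ~2/3 of the SGA for the buffer
    cache, and a fixed shared pool starting size (4GB by default).
    Integer division rounds each value down to whole gigabytes.
    """
    sga = total_ram_gb * 3 // 4   # ~75% of physical RAM
    cache = sga * 2 // 3          # ~2/3 of the SGA for the buffer cache
    other = sga - cache - shared_pool_gb
    if other < 0:
        raise ValueError(
            f"{total_ram_gb}GB RAM is too small for a {shared_pool_gb}GB shared pool "
            f"on top of a {cache}GB buffer cache"
        )
    return SgaPlan(sga, cache, shared_pool_gb, other)


print(plan_sga(32))
# SgaPlan(sga_target_gb=24, db_cache_gb=16, shared_pool_gb=4, other_pools_gb=4)
```

For a 64GB host the same rules give a 48G SGA with a 32G buffer cache; treat either result as a starting point to validate with AWR, not a final answer.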

Cross-Layer Memory Balancing

In production, many organizations run OID on a separate server from the database. If that is the case, you can generally allocate more memory to the Oracle Database (on the DB server) without worrying about competition from OID or WebLogic on that same box. Conversely, the OID/LDAP server has no SGA to size, but it does need enough memory for the OID server processes, the WebLogic JVM heap, OS overhead, and so on.

On a single server, the SGA, PGA, WebLogic heap, OS, and LDAP processes all draw on the same pool of physical memory. Over-allocating to the database can trigger OS swapping, so monitoring (e.g., AWR, OEM, GC logs) is crucial to find a stable balance.
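On a single server, a quick budget check makes this contention visible before anything swaps. The hypothetical Python helper below simply subtracts every co-located consumer from physical RAM. The PGA, OS reserve, and LDAP process figures you pass in are your own estimates, not Oracle-provided values, and a JVM process uses more memory than its `-Xmx` heap (metaspace, thread stacks, native memory), so treat the heap figure as a lower bound.

```python
def memory_headroom_gb(total_ram_gb: float,
                       sga_gb: float,
                       pga_gb: float,
                       jvm_heap_gb: float,
                       os_reserve_gb: float,
                       ldap_procs_gb: float = 0.0) -> float:
    """Return the RAM (GB) left after all co-located consumers are counted.

    A negative result means the plan over-commits memory, which on a
    single server typically shows up as OS swapping under load.
    """
    committed = sga_gb + pga_gb + jvm_heap_gb + os_reserve_gb + ldap_procs_gb
    return total_ram_gb - committed


# Hypothetical estimates: 2GB PGA and 2GB OS reserve on the 32GB example host.
print(memory_headroom_gb(32, sga_gb=24, pga_gb=2, jvm_heap_gb=8, os_reserve_gb=2))
# -4.0
```

Under these estimates, the 24G SGA from this section combined with the 8G heap from the reference table would over-commit a 32GB host, which is exactly the kind of conflict that pushes teams toward smaller allocations or a separate OID/WebLogic server.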

Real-world Case Study: Financial Services Implementation

Before optimization, let’s look at how to check your initial settings:

1. To check parameter settings:

-- Check initial memory parameter settings
SELECT name, value, display_value, description
FROM v$parameter
WHERE name IN (
  'sga_target',
  'db_cache_size',
  'shared_pool_size'
);

2. Then check actual component sizes:

-- Monitor actual memory allocation of SGA components
SELECT component,
  ROUND(current_size/1024/1024/1024, 2) AS current_size_GB,
  ROUND(min_size/1024/1024/1024, 2) AS min_size_GB,
  ROUND(max_size/1024/1024/1024, 2) AS max_size_GB
FROM v$sga_dynamic_components
WHERE current_size > 0;

A financial services company was experiencing performance issues in their OID environment, handling 50,000 user authentications per hour. Their initial configuration looked like this:

-- Initial Configuration

sga_target = 8G

db_cache_size = 4G

shared_pool_size = 1G

They were experiencing:

- High disk I/O rates

- Increased response times during peak hours

- Frequent session timeouts

They decided to increase the overall SGA size to 24GB on a 32GB server, applying the buffer cache and shared pool distribution principles:

Optimized Configuration

-- Optimized Configuration

sga_target = 24G

db_cache_size = 16G

shared_pool_size = 4G

To confirm the effects, they monitored key performance indicators in real time:

-- Monitor key performance indicators in real time
-- Physical Read: Rate of disk I/O requests
-- Response Time: Transaction processing speed
-- Session Count: Number of sessions
SELECT begin_time, metric_name, value, metric_unit
FROM v$sysmetric_history
WHERE group_id = 2
AND metric_name IN (
  'Physical Read Total IO Requests Per Sec',
  'Response Time Per Txn',
  'Session Count'
)
ORDER BY metric_name, begin_time;

Key Takeaway: Scaling the SGA (while monitoring OS memory headroom) led to dramatic performance gains. In separate-server environments, you would simply tune the database node’s memory as needed without competing with OID or WebLogic on the same host.
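The monitoring query returns one row per metric per sampling interval, so a before/after comparison is easiest on averages. The Python sketch below is a hypothetical helper that assumes you have already exported `(metric_name, value)` pairs from `v$sysmetric_history` for a baseline window and a post-change window (for example, via a SQL*Plus spool or any database driver); it does not connect to the database itself.

```python
from collections import defaultdict
from statistics import mean


def average_by_metric(rows):
    """Mean value per metric from (metric_name, value) pairs."""
    buckets = defaultdict(list)
    for name, value in rows:
        buckets[name].append(float(value))
    return {name: mean(values) for name, values in buckets.items()}


def percent_change(before_rows, after_rows):
    """Percent change in each metric's mean between two windows.

    Only metrics present in both windows (with a non-zero baseline) are
    compared. For physical reads and response time per transaction, a
    negative value means the change helped.
    """
    before = average_by_metric(before_rows)
    after = average_by_metric(after_rows)
    return {
        name: (after[name] - before[name]) / before[name] * 100.0
        for name in sorted(before.keys() & after.keys())
        if before[name] != 0
    }
```

Comparing windows of similar length and time of day (for example, two weekday peaks) keeps the comparison fair; a single quiet interval can make any change look good.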

Connection Management: Ensuring Smooth Oracle OID Operations

Managing connections between Oracle Database, OID, and clients is essential for preventing bottlenecks. This section details connection pool adjustments, LDAP server configuration, and real-world implementation tips for enterprise use.

Database Connection Configuration

First, let’s check current connection parameters:

-- Check current connection and process parameters
SELECT name, value, display_value, description
FROM v$parameter
WHERE name IN (
  'processes',
  'sessions',
  'transactions'
);

Then set optimized values:

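-- Set connection parameters for optimal performance
-- processes, sessions, and transactions are static parameters:
-- write them to the SPFILE and restart the instance to apply them
ALTER SYSTEM SET processes = 500 SCOPE=SPFILE;     -- Base process limit
ALTER SYSTEM SET sessions = 750 SCOPE=SPFILE;      -- 1.5x processes
ALTER SYSTEM SET transactions = 825 SCOPE=SPFILE;  -- 1.1x sessions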

Understanding these parameters:

- processes = 500
  - Typically supports 300–400 concurrent user sessions
  - Includes headroom for background processes (50–100)
  - Maintains system stability while preventing resource exhaustion
- sessions = 750
  - ~1.5× the processes limit
  - Accommodates multiple sessions per user
  - Provides buffer for background operations
- transactions = 825
  - ~1.1× sessions, so slightly higher than the session limit
  - Ensures smooth handling of concurrent operations
  - Prevents transaction bottlenecks during peak usage
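The ratios behind these three values can be expressed as a small calculation. The Python sketch below is a hypothetical helper that applies this article's rules (sessions ≈ 1.5 × processes, transactions ≈ 1.1 × sessions) using integer math with rounding up, so floating-point rounding cannot push a result off by one. It only computes the numbers; they still have to be written to the SPFILE.

```python
def ceil_div(numerator: int, denominator: int) -> int:
    """Integer division that rounds up (avoids float rounding issues)."""
    return -(-numerator // denominator)


def derive_connection_limits(processes: int) -> dict[str, int]:
    """Hypothetical helper: derive sessions and transactions from processes
    using this article's ratios (1.5x and 1.1x), rounded up."""
    sessions = ceil_div(processes * 3, 2)        # ~1.5x processes
    transactions = ceil_div(sessions * 11, 10)   # ~1.1x sessions
    return {"processes": processes, "sessions": sessions, "transactions": transactions}


print(derive_connection_limits(500))
# {'processes': 500, 'sessions': 750, 'transactions': 825}
print(derive_connection_limits(750))
# {'processes': 750, 'sessions': 1125, 'transactions': 1238}
```

The second call reproduces the 750/1125 pair used in the staged rollout later in this article.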

Tip: Ensure that your WebLogic thread configuration also aligns with these values. If you only have 50 total threads in WebLogic but raise sessions to 750, the database can handle more connections than your middleware can effectively utilize. This mismatch can cause either underutilized DB capacity or stuck threads on the application side.

LDAP Server Connection Management

The LDAP layer requires its own connection configuration to work harmoniously with the database layer. Each LDAP server process can hold several concurrent DB connections, so you must ensure that the total across all processes stays within the limits set on the database side.

Configure LDAP layer connections to match database capacity:

# Update LDAP server connection settings (LDIF, applied with ldapmodify)
dn: cn=oid1,cn=osdldapd,cn=subconfigsubentry
changetype: modify
replace: orclmaxcc
orclmaxcc: 10
-
replace: orclserverprocs
orclserverprocs: 8
-
replace: orclldapconntimeout
orclldapconntimeout: 60

Monitor connection utilization:

# Monitor active connections and usage patterns
ldapsearch -h <host> -p <port> -D cn=orcladmin -w <password> -b \
  "cn=client connections,cn=monitor" "(objectclass=*)" \
  orclmaxconnections orclcurrentconnections

Key Configuration Components:

- Maximum Concurrent Connections (orclmaxcc = 10): each LDAP server process can handle 10 concurrent DB connections, balancing resource utilization with connection availability.
- Server Processes (orclserverprocs = 8): often matched to CPU core count for optimal resource usage.
- Connection Timeout (orclldapconntimeout = 60): a one-minute timeout for inactive connections prevents idle sessions from accumulating.
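Because `orclmaxcc` is a per-process limit, the database-side footprint of one OID instance is roughly `orclserverprocs × orclmaxcc` connections. The Python sketch below is a hypothetical budget check built on that assumption, plus the assumption of dedicated server mode, where each DB connection consumes one slot in `processes`. The background reserve, the number of OID instances, and any extra connection pool sizes are your own inputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OidInstance:
    server_procs: int  # orclserverprocs
    max_cc: int        # orclmaxcc: DB connections per server process

    @property
    def db_connections(self) -> int:
        return self.server_procs * self.max_cc


def processes_headroom(processes_limit: int,
                       background_reserve: int,
                       oid_instances: list[OidInstance],
                       other_connections: int = 0) -> int:
    """Hypothetical check: processes left after background processes,
    all OID instances' DB connections, and any other connection pools.
    A negative result means the LDAP tier can ask for more connections
    than the database will accept."""
    oid_total = sum(inst.db_connections for inst in oid_instances)
    return processes_limit - background_reserve - oid_total - other_connections


# One OID instance with this section's settings and 100 processes reserved
# for background work (upper end of the 50-100 range given earlier).
print(processes_headroom(500, 100, [OidInstance(server_procs=8, max_cc=10)]))
# 320
```

Adding a second OID instance with the same settings doubles the LDAP footprint to 160 connections, which is why instance count belongs in the same calculation as `orclmaxcc` and `orclserverprocs`.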

Real-world Case Study: Retail Portal

A large retail organization experienced severe connection timeouts during their holiday season. Their initial configuration was:

Initial State:

-- Check initial connection utilization against the configured limits
SELECT resource_name, current_utilization, max_utilization, limit_value,
  ROUND(current_utilization / TO_NUMBER(TRIM(limit_value)) * 100, 2) AS usage_percentage
FROM v$resource_limit
WHERE resource_name IN ('processes', 'sessions');

processes = 200

sessions = 300

orclmaxcc = 5

orclserverprocs = 4

Challenges Faced:

- Connection timeouts during peak shopping hours

- Growing queue of pending authentication requests

- Increased customer complaints about access issues

Optimized Configuration:

-- Database connection settings (static parameters: restart required)
ALTER SYSTEM SET processes = 500 SCOPE=SPFILE;
ALTER SYSTEM SET sessions = 750 SCOPE=SPFILE;

# LDAP server settings
dn: cn=oid1,cn=osdldapd,cn=subconfigsubentry
changetype: modify
replace: orclmaxcc
orclmaxcc: 10
-
replace: orclserverprocs
orclserverprocs: 8

Implementation Results:

- Connection timeouts reduced by 95%

- Authentication queue times dropped from minutes to seconds

- System remained stable even at 3x normal load

Key Takeaway: Harmonizing DB connection limits with LDAP settings alleviated peak-load failures. In a multi-server architecture, keep an eye on network latency; if OID is on a different host from the DB, slow or saturated network links can mimic connection pool saturation.

Implementing Scaling Strategies: Step-by-Step Rollout

After you’ve identified potential bottlenecks and established baseline metrics (CPU, memory, sessions, etc.), you can implement improvements in a structured way. Below is a high-level approach.

Initial System Analysis

Before making any major changes, gather and analyze your current state:

Memory Usage:

- Run `free -m` on Unix/Linux (or the equivalent OS command) to see if you’re close to exhausting RAM.
- In single-server setups, confirm that the DB SGA, WebLogic (JVM), and OS are not fighting for memory.
- In multi-server setups, check each node separately (DB node vs. OID/WebLogic node).

# Memory pressure was evident in monitoring
$ free -m
              total        used        free      shared  buff/cache   available
Mem:          32000       24000        1000        1000        6000        7000

CPU Load:

- Check average CPU usage (`top`, `vmstat`, or OS monitoring tools).
- If usage regularly exceeds 80–85%, you may need more cores or a more efficient configuration.

# CPU showing signs of strain
$ top
%Cpu(s): 85.2 us, 10.3 sy, 0.0 ni, 4.1 id, 0.0 wa, 0.0 hi, 0.4 si

Database Processes:

- Query `v$resource_limit` to see if `processes` or `sessions` are near the maximum.
- Evaluate peak concurrency times (e.g., morning login spikes).

-- Database connections near limit
SELECT resource_name, current_utilization, max_utilization
FROM v$resource_limit
WHERE resource_name = 'processes';

RESOURCE_NAME   CURRENT_UTILIZATION   MAX_UTILIZATION
processes       450                   500

OID/LDAP Logs:

Check for repeated timeout errors, slow queries, or warnings about connection saturation.

Collecting baseline metrics (AWR or ASH reports on the database side, plus logs/metrics on the OID/LDAP side) helps you compare results before and after each change.
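The baseline checks above can be scripted so every change window is measured the same way. The Python sketch below is hypothetical: it parses the `free -m` and `top` samples shown in this section, computes `processes` utilization from `v$resource_limit` figures, and flags CPU above 85% and connection usage above 80% (thresholds taken from this article's guidance). Output formats vary across OS and `procps` versions, so treat the parsing as an assumption to verify on your own hosts.

```python
import re

FREE_SAMPLE = """\
              total        used        free      shared  buff/cache   available
Mem:          32000       24000        1000        1000        6000        7000
"""
TOP_SAMPLE = "%Cpu(s): 85.2 us, 10.3 sy, 0.0 ni, 4.1 id, 0.0 wa, 0.0 hi, 0.4 si"


def available_memory_pct(free_output: str) -> float:
    """Share of RAM still 'available' according to `free -m`."""
    for line in free_output.splitlines():
        if line.startswith("Mem:"):
            fields = line.split()
            total, available = int(fields[1]), int(fields[6])
            return available * 100 / total
    raise ValueError("no 'Mem:' line in free output")


def cpu_busy_pct(top_line: str) -> float:
    """CPU busy percentage (100 - idle) from a top summary line."""
    match = re.search(r"([\d.]+)%?\s*id\b", top_line)
    if match is None:
        raise ValueError("no idle field in top output")
    return 100.0 - float(match.group(1))


def utilization_pct(current: int, limit: int) -> float:
    """Current utilization as a percentage of the configured limit."""
    return current * 100 / limit


def baseline_flags(cpu_pct: float, processes_pct: float,
                   cpu_limit: float = 85.0, conn_limit: float = 80.0) -> list[str]:
    """Names of the checks that exceed their thresholds."""
    flags = []
    if cpu_pct > cpu_limit:
        flags.append("cpu")
    if processes_pct > conn_limit:
        flags.append("processes")
    return flags


mem = available_memory_pct(FREE_SAMPLE)   # 21.875
cpu = cpu_busy_pct(TOP_SAMPLE)            # about 95.9
procs = utilization_pct(450, 500)         # 90.0
print(f"available RAM {mem:.1f}%, CPU busy {cpu:.1f}%, processes {procs:.0f}%")
print(baseline_flags(cpu, procs))         # ['cpu', 'processes']
```

Saving these numbers alongside each AWR snapshot gives you a consistent before/after record for every stage of the rollout.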

Rolling Out Changes in Stages

Implement improvements gradually, so you can isolate effects and minimize risk:

1. Memory Optimization

- Increase SGA in moderate steps (e.g., from 8G → 16G → 24G) rather than jumping straight to 32G or 48G.

- On a single-server deployment, ensure enough room for OS processes, WebLogic JVM, and other services. In a multi-server architecture, your DB server can focus on the SGA, while the OID server primarily handles LDAP processes and JVM.

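-- Later-stage example for a growing user base; under the 75% rule,
-- a 48G SGA assumes a host with about 64GB of RAM
-- sga_max_size must be raised too, because sga_target cannot exceed it
ALTER SYSTEM SET sga_max_size = 48G SCOPE=SPFILE;
ALTER SYSTEM SET sga_target = 48G SCOPE=SPFILE;
ALTER SYSTEM SET db_cache_size = 32G SCOPE=SPFILE;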

2. Connection Pool Adjustment

- Raise `processes`, `sessions`, and `transactions` on the DB side if you see high session utilization.
- Adjust `orclmaxcc` and `orclserverprocs` on the LDAP side accordingly.
- Align these changes with WebLogic thread pool sizes (so you aren’t over-provisioning one layer).

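-- Scaled up connection capacity (static parameters: restart required)
ALTER SYSTEM SET processes = 750 SCOPE=SPFILE;
ALTER SYSTEM SET sessions = 1125 SCOPE=SPFILE;
ALTER SYSTEM SET transactions = 1238 SCOPE=SPFILE;  -- ~1.1x sessions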

3. Monitor, Validate, and Repeat

- Re-check CPU, memory usage, disk I/O patterns, and response times.

- If new bottlenecks appear (e.g., thread starvation in WebLogic, GC pauses in the JVM), investigate further in Advanced Optimization Techniques.

- Keep an eye on long-running or idle connections—especially in a distributed setup, where network latency can lead to incomplete session cleanup.

Important: If you require more advanced WebLogic or JVM tuning—such as G1GC parameters, Work Manager policies, or diagnosing memory leaks—refer to the advanced section for deeper guidance.
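The first rollout step (raising the SGA in moderate increments) can be planned ahead of time. The Python sketch below is a hypothetical helper that lists the intermediate `sga_target` values between the current and target sizes and refuses any target above about 75% of physical RAM. Each step would be its own change window, validated before the next one, and any step beyond the current `sga_max_size` needs an SPFILE change plus a restart.

```python
def sga_steps(current_gb: int, target_gb: int, step_gb: int, total_ram_gb: int) -> list[int]:
    """Hypothetical planner: sga_target values to apply one change window at a time."""
    ceiling_gb = total_ram_gb * 3 // 4  # ~75% of physical RAM
    if target_gb > ceiling_gb:
        raise ValueError(
            f"target {target_gb}G exceeds ~75% of {total_ram_gb}GB RAM ({ceiling_gb}G)"
        )
    if step_gb <= 0 or target_gb <= current_gb:
        raise ValueError("need a positive step and a target above the current size")
    steps = list(range(current_gb + step_gb, target_gb, step_gb))
    steps.append(target_gb)  # always finish exactly on the target
    return steps


print(sga_steps(8, 24, 8, total_ram_gb=32))   # [16, 24]
print(sga_steps(24, 48, 8, total_ram_gb=64))  # [32, 40, 48]
```

The first call reproduces the 8G → 16G → 24G path from step 1; asking for 48G on a 32GB host raises an error instead of producing a plan.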

Key Lessons from Real-World Use Cases

By systematically adjusting memory usage, connection limits, and OID/DB configurations, you can often achieve:

- Over 45% improvement in authentication or search response times

- Drastically fewer timeouts or errors during peak loads

- Stable performance even under 4× user growth or higher concurrency

Key Lessons:

1. Coordinated Changes

- DB parameters, LDAP config, and WebLogic threads all interact. Tuning one layer while ignoring others can shift the bottleneck.

2. Ongoing Monitoring

- Always re-check CPU, memory, session usage, and logs after each change.

- Continuous tracking helps confirm improvements and detect regressions early.

3. Phased Approach

- Gradual rollouts let you isolate variables (e.g., memory changes vs. session increases) and reduce the risk of introducing multiple changes at once.

- Validate performance after each phase using AWR for the DB, `ldapsearch` monitoring for OID, and OS-level checks for CPU and memory.

Considerations for Separate Server Architectures

In most production environments, the Oracle Database and OID (including WebLogic) are deployed on separate servers or clusters. This approach provides:

1. Clearer Resource Isolation

- An OID/LDAP server focuses on WebLogic threads, JVM heap, and LDAP processes.

- A DB server dedicates resources to the SGA, PGA, and database background processes.

2. Independent Scaling

- You can scale the OID/LDAP server’s CPU or memory independently from the DB server.

- The DB side may also scale (e.g., adding more RAM, using Oracle RAC) without impacting the OID node.

3. Network and Latency

- Ensure low latency and sufficient bandwidth between OID and DB, especially with large query volumes or spikes in authentication requests.

- Monitor DB session usage to verify that OID traffic doesn’t saturate the DB server or the network link.

4. Single- vs. Multi-Server

- Single-server: Must carefully balance OS + DB + OID memory on one system.

- Multi-server: Gains isolation but must watch inter-node traffic and potentially higher licensing or infrastructure costs.

Regardless of architecture, the fundamental tuning principles—database memory sizing, robust connection management, and coordinated WebLogic/JVM settings—remain the same. Monitoring cross-host performance is especially vital in distributed setups.
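For the network and latency point above, a rough first check is how long the OID host takes to open a TCP connection to the database listener. The Python sketch below is a hypothetical probe that uses only the standard library; it measures the connect handshake, not SQL or LDAP round trips. Port 1521 is the default Oracle Net listener port, and the hostname in the comment is a placeholder; substitute your own values.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host: str, port: int = 1521,
                           samples: int = 5, timeout: float = 2.0) -> float:
    """Median TCP connect time in milliseconds from this host to host:port.

    Raises OSError if the listener is unreachable within `timeout` seconds.
    """
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connection established; close it immediately
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


# Example (placeholder hostname), run from the OID/WebLogic host:
# print(f"{tcp_connect_latency_ms('db-host.example.com'):.2f} ms")
```

The median keeps a single slow handshake from dominating the result; comparing runs taken at quiet and peak times is more informative than any one absolute number.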

Quick Parameter Reference Table

Below is a summary of key parameters and recommended starting values. Real configurations will vary based on concurrency levels, available hardware, and your actual usage patterns.

| Layer | Key Parameters | Typical Starting Point | Notes |
|---|---|---|---|
| Database | processes, sessions, transactions | processes=500, sessions=750, transactions=825 | Scale with concurrency. 1.5× processes for sessions, 1.1× sessions for transactions. |
| | sga_target, db_cache_size, shared_pool_size | sga_target=24G, db_cache_size=16G, shared_pool_size=4G (on 32GB server) | Adjust if DB is on a separate server. On single-server, watch OS + WebLogic memory usage. |
| LDAP (OID) | orclmaxcc, orclserverprocs, orclldapconntimeout | orclmaxcc=10, orclserverprocs=8, orclldapconntimeout=60 | Match CPU cores for orclserverprocs. Keep DB concurrency in mind (processes/sessions). |
| WebLogic | Thread Pool min/max, queue-size | min=50, max=400, queue-size=-1 | Enough threads for peak load; queue-size=-1 handles spikes but requires vigilant monitoring. |
| | Work Manager constraints | e.g., | Use fair-share or priority classes for critical operations. |
| JVM | -Xms, -Xmx, -XX:+UseG1GC, -XX:MaxGCPauseMillis, etc. | -Xms4G, -Xmx8G, -XX:MaxGCPauseMillis=200 (G1GC) | Tweak based on heap usage and GC logs. Metaspace ~256–512MB typical for OID. |

Tip: Begin with conservative allocations. Expand gradually as you collect metrics (AWR, OEM, OS logs). The best practice is to observe real-world load, then increment parameters.

Advanced Monitoring and Next Steps

Even a perfectly tuned system can degrade over time as workloads change or new features launch. A preventive monitoring strategy ensures you catch warning signs early:

1. Daily Health Checks

- CPU load and memory utilization (`free -m`, OS counters).
- DB session usage (e.g., `v$resource_limit`).
- WebLogic thread pool status (using WLST or the console).

2. Regular Performance Snapshots

- AWR/ASH for DB performance and wait events.

- OEM dashboards or custom scripts for trending CPU, memory, and concurrency patterns.

- `ldapsearch` queries to check active connections on the OID side.

3. Alerting Mechanisms

- Trigger alerts if GC pause times exceed 200ms or if sessions approach 80% capacity.

- Consider integration with enterprise monitoring tools (e.g., Prometheus, Grafana, Splunk).
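The two alert thresholds above can be encoded as a simple rule check that an external monitoring system or a scheduled job could call. The Python sketch below is hypothetical: it assumes you already collect the longest GC pause per interval from GC logs and the `sessions` current/limit values from `v$resource_limit`, and it only decides whether to alert.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HealthSample:
    max_gc_pause_ms: float  # longest GC pause in the interval (from GC logs)
    sessions_current: int   # CURRENT_UTILIZATION for 'sessions' in v$resource_limit
    sessions_limit: int     # LIMIT_VALUE for 'sessions'


def evaluate_alerts(sample: HealthSample,
                    gc_pause_limit_ms: float = 200.0,
                    session_pct_limit: float = 80.0) -> list[str]:
    """Return alert messages for long GC pauses or sessions near capacity."""
    alerts = []
    if sample.max_gc_pause_ms > gc_pause_limit_ms:
        alerts.append(
            f"GC pause {sample.max_gc_pause_ms:.0f}ms exceeds {gc_pause_limit_ms:.0f}ms"
        )
    session_pct = sample.sessions_current * 100 / sample.sessions_limit
    if session_pct >= session_pct_limit:
        alerts.append(f"sessions at {session_pct:.0f}% of limit")
    return alerts


print(evaluate_alerts(HealthSample(max_gc_pause_ms=250, sessions_current=600, sessions_limit=750)))
# ['GC pause 250ms exceeds 200ms', 'sessions at 80% of limit']
```

Keeping the thresholds as parameters makes it easy to tighten them once you have a few weeks of baseline data.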

If you need more sophisticated optimizations—for instance, advanced WebLogic Work Manager policies, G1GC tuning under extreme loads, or diagnosing complex resource contention—refer to Advanced Optimization Techniques and Troubleshooting. That section covers:

- Detailed Work Manager examples and thread diagnostics

- Fine-grained JVM GC settings (e.g., G1 region sizing, Metaspace management)

- Real-world concurrency and memory leak scenarios

Summary of Implementation Strategies

1. Plan Your Memory Footprint

- Decide if DB and OID are on the same or separate servers.

- Allocate ~75% of total RAM to the database on a single server; if the database runs on its own server, size its memory against that host’s RAM without reserving room for OID or WebLogic.

2. Configure Connections Carefully

- Align DB `processes`/`sessions` with OID’s `orclmaxcc` and WebLogic’s concurrency model.
- In multi-server setups, confirm adequate network capacity and low latency.

3. Monitor, Validate, Iterate

- Compare baseline vs. post-change metrics: CPU, memory, I/O, wait events, GC logs.

- Revisit these steps as user growth or new applications emerge.

By applying these strategies—methodical memory allocation, properly scaled connection pools, coordinated WebLogic/JVM settings, and robust monitoring—you will have an OID environment that scales smoothly and delivers stable, reliable performance under evolving workloads.

Moving to Advanced Topics

For WebLogic thread tuning, G1GC fine-tuning, or advanced troubleshooting, check out Part 3 of this guide.
