Mastering Oracle OID Performance: A Comprehensive Guide to Optimization, Troubleshooting, and Best Practices

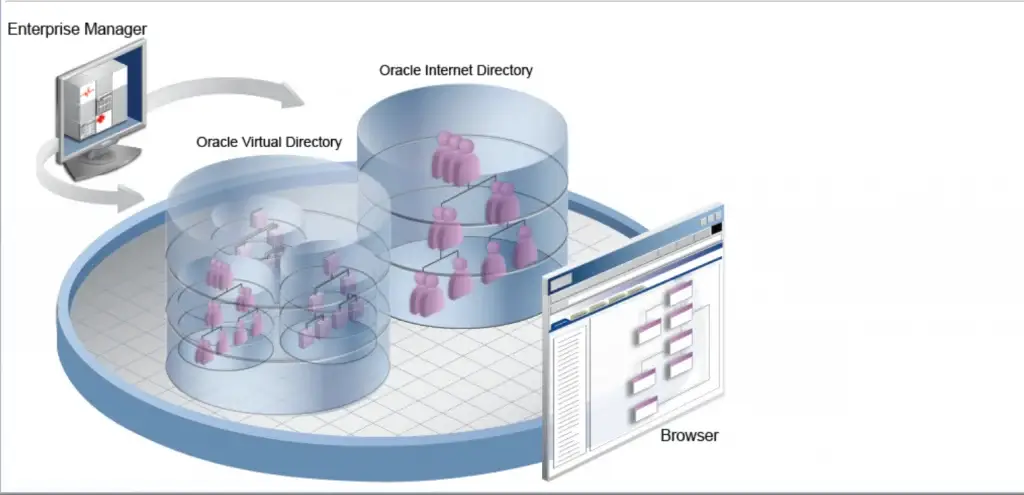

When it comes to managing Oracle Internet Directory (OID) in a production environment, performance optimization is not just about tweaking a few settings – it’s about understanding how different components work together and how to fine-tune each one for optimal performance. Let’s dive deep into the world of OID optimization, exploring each aspect in detail.

Understanding System Resource Optimization

Think of system resource optimization as building a well-balanced machine where each component needs to work in harmony with others. Let’s explore how to achieve this balance.

Memory: The Foundation of Performance

Memory management in OID is like managing a city’s infrastructure – you need to allocate resources wisely across different districts. In our case, we need to consider three main areas:

First, the OID process itself requires a minimum of 4GB of memory. This might seem like a lot, but consider what this memory is used for: it maintains the LDAP entry cache (quick-access storage for frequently requested information) and handles indexing operations (imagine organizing a massive library catalog for quick searches). Note that the orclmaxcc parameter does not size this cache. It sets the number of database connections each OID server process opens, while the entry cache has its own attributes such as orclecachemaxsize.

The WebLogic Server, our second memory consumer, needs at least 2GB. This memory handles the admin console and Oracle Directory Services Manager (ODSM) operations. Think of this as the control room where administrators manage the entire system. We’ll configure this through JVM settings in setDomainEnv.sh.

Finally, we need to reserve 2GB for the Operating System itself. This might seem generous, but remember that the OS handles file caching and system processes that keep everything running smoothly.

For production environments handling heavy loads, here’s a practical way to calculate your memory needs:

Start with a base of 16GB, then add:

- 2GB for every million directory entries (think of each entry as a card in a massive filing system)

- 2GB for each major application that will be accessing the directory
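To make the rule of thumb concrete, here’s a minimal sketch that turns it into a calculation. The function name estimate_memory_gb is hypothetical, and rounding partial millions of entries up to the next whole million is an assumption on our part, not something the rule itself specifies.

```shell
#!/bin/bash
# Rough memory estimate based on the rule of thumb above.
# Arguments: number of directory entries, number of major applications.
# (estimate_memory_gb is a hypothetical helper, not an Oracle tool.)
estimate_memory_gb() {
    local entries=$1 apps=$2
    local base_gb=16 gb_per_million=2 gb_per_app=2
    # Assumption: round partial millions up, so 2.5M entries count as 3M.
    local millions=$(( (entries + 999999) / 1000000 ))
    echo $(( base_gb + millions * gb_per_million + apps * gb_per_app ))
}

# 2.5 million entries and 3 major applications
estimate_memory_gb 2500000 3   # prints 28
```

Treat the result as a starting point for capacity planning rather than a precise requirement.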

Let’s implement these memory settings. For the WebLogic Server, we’ll create a configuration file that sets up our memory parameters:

#!/bin/bash

if [ "${SERVER_NAME}" = "AdminServer" ] ; then

# We start with heap size configuration

USER_MEM_ARGS="-Xms2048m -Xmx2048m"

# Configure metaspace - this is where class metadata lives

USER_MEM_ARGS="${USER_MEM_ARGS} -XX:MetaspaceSize=256m -XX:MaxMetaspaceSize=512m"

# Set up garbage collection for optimal performance

USER_MEM_ARGS="${USER_MEM_ARGS} -XX:+UseG1GC"

USER_MEM_ARGS="${USER_MEM_ARGS} -XX:MaxGCPauseMillis=200"

USER_MEM_ARGS="${USER_MEM_ARGS} -XX:ParallelGCThreads=4"

export USER_MEM_ARGS

fi

This configuration deserves some explanation. G1GC (the Garbage-First garbage collector) is like an efficient cleaning service: it reclaims memory from unused objects while keeping interruptions short. The MaxGCPauseMillis setting gives the collector a pause-time goal of 200 milliseconds per collection. It is a target the JVM tries to meet rather than a hard limit, but it helps keep response times consistent. Setting -Xms and -Xmx to the same value also avoids heap resizing at runtime.

CPU: The Engine of Your System

CPU configuration is like designing a highway system – you need enough lanes to handle peak traffic without overbuilding. For a production environment, here’s what you need to consider:

The minimum requirement is 4 CPU cores, allocated as follows:

- 2 cores dedicated to OID processes (these handle the main LDAP operations)

- 1 core for WebLogic (managing the administrative interface)

- 1 core reserved for OS operations (keeping the system running smoothly)

As your system grows, you’ll need to scale this up. A good rule of thumb is to add:

- 1 core for every 1000 concurrent connections

- 1 core for every 1 million directory entries

- Always keep 1 core reserved for OS operations
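Here’s a small sketch of how the scaling rule could be applied. It assumes the extra cores are added on top of the 4-core minimum (which already includes the reserved OS core) and that partial thousands of connections and partial millions of entries round up. The function name estimate_cpu_cores is hypothetical.

```shell
#!/bin/bash
# Rough core-count estimate based on the rule of thumb above.
# Arguments: peak concurrent connections, number of directory entries.
# (estimate_cpu_cores is a hypothetical helper, not an Oracle tool.)
estimate_cpu_cores() {
    local connections=$1 entries=$2
    local base_cores=4   # 2 for OID + 1 for WebLogic + 1 reserved for the OS
    # Assumption: round partial units up to the next whole core.
    local conn_cores=$(( (connections + 999) / 1000 ))
    local entry_cores=$(( (entries + 999999) / 1000000 ))
    echo $(( base_cores + conn_cores + entry_cores ))
}

# 2,500 concurrent connections and 3 million entries
estimate_cpu_cores 2500 3000000   # prints 10
```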

Let’s set up monitoring for our CPU usage. Here’s a script that helps track CPU utilization:

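#!/bin/bash
CPU_THRESHOLD=70
LOAD_THRESHOLD=$(nproc)
# Example log location (an assumption); point this at your own log directory
LOG_FILE=${LOG_FILE:-/tmp/oid_cpu_monitor.log}

# Check CPU usage and alert if it exceeds our threshold.
# Field 2 of the "Cpu(s)" line is user-space CPU time on procps top.
CPU_USAGE=$(top -bn1 | grep "Cpu(s)" | awk '{print $2}')
if [ "$(echo "$CPU_USAGE > $CPU_THRESHOLD" | bc)" = "1" ]; then
    echo "ALERT: High CPU usage detected: ${CPU_USAGE}%" >> "$LOG_FILE"
fi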

This script is like having a dashboard that monitors your system’s vital signs. It alerts you when CPU usage exceeds 70% – a level we’ve chosen because it gives us enough headroom to handle sudden spikes in activity while maintaining good performance.
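The script defines LOAD_THRESHOLD but never uses it. One way to put it to work is sketched below, assuming a Linux host where /proc/loadavg and nproc are available. The helper name load_exceeds is hypothetical, and comparing the 1-minute load average to the core count is a common convention rather than an OID-specific rule.

```shell
#!/bin/bash
# Succeeds (exit status 0) when the given load average is above the threshold.
# awk handles the floating-point comparison that plain [ ] cannot.
# (load_exceeds is a hypothetical helper.)
load_exceeds() {
    local load=$1 threshold=$2
    awk -v l="$load" -v t="$threshold" 'BEGIN { exit !(l > t) }'
}

# Assumption: Linux, where the first field of /proc/loadavg is the 1-minute load.
LOAD_THRESHOLD=$(nproc)
LOAD_1MIN=$(cut -d ' ' -f1 /proc/loadavg)
if load_exceeds "$LOAD_1MIN" "$LOAD_THRESHOLD"; then
    echo "ALERT: 1-minute load ${LOAD_1MIN} exceeds ${LOAD_THRESHOLD} cores"
fi
```

A sustained load average above the number of cores usually means processes are queuing for CPU time, which complements the percentage-based check.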

Database Optimization: The Heart of OID

The database is where all your directory data lives, and its performance is crucial. Let’s configure it for optimal performance:

-- Configure memory parameters for the database

ALTER SYSTEM SET sga_target = 6G SCOPE=SPFILE;

ALTER SYSTEM SET pga_aggregate_target = 2G SCOPE=SPFILE;

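ALTER SYSTEM SET db_cache_size = 2G SCOPE=SPFILE;

Because these statements use SCOPE=SPFILE, they take effect only after the database instance is restarted. Think of the SGA (System Global Area) as a shared workspace where all database processes can access common data. We recommend allocating about 60% of your available memory to the SGA on dedicated database servers. With sga_target set, db_cache_size acts as a minimum for the buffer cache rather than a fixed size. The PGA (Program Global Area) is like each process’s personal workspace. We recommend setting it to about 25% of your SGA size, which is 1.5G for a 6G SGA (the example rounds this up to 2G).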
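As a quick illustration of those percentages, the sketch below derives candidate values from the memory available to a dedicated database server. The function name suggest_db_memory is hypothetical, and the output is only a starting point to review before putting it into ALTER SYSTEM statements.

```shell
#!/bin/bash
# Suggest SGA and PGA sizes from the 60% / 25% guideline above.
# Argument: memory available to the database server, in GB.
# (suggest_db_memory is a hypothetical helper, not an Oracle tool.)
suggest_db_memory() {
    local total_gb=$1
    local total_mb=$(( total_gb * 1024 ))
    local sga_mb=$(( total_mb * 60 / 100 ))   # about 60% of available memory
    local pga_mb=$(( sga_mb * 25 / 100 ))     # about 25% of the SGA
    echo "sga_target=${sga_mb}M pga_aggregate_target=${pga_mb}M"
}

suggest_db_memory 10   # prints sga_target=6144M pga_aggregate_target=1536M
```

For a 10GB server this lands on a 6G SGA, in line with the example statements.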

To ensure your database stays performant, regular maintenance is crucial. Here’s a script that helps maintain optimal performance:

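-- Analyze OID schema statistics
-- (replace PREFIX_ODS with the name of your OID schema)
BEGIN
  DBMS_STATS.GATHER_SCHEMA_STATS(
    ownname          => 'PREFIX_ODS',
    estimate_percent => DBMS_STATS.AUTO_SAMPLE_SIZE,
    method_opt       => 'FOR ALL COLUMNS SIZE AUTO',
    cascade          => TRUE);
END;
/

This is like taking regular inventory of your data: it helps the database optimizer make better decisions about how to execute queries efficiently. The call is wrapped in a BEGIN ... END block because the SQL*Plus EXEC shortcut does not accept a statement that spans several lines.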

Understanding and Resolving Common Issues

Even with optimal configuration, you’ll occasionally face challenges with your OID environment. Let’s explore common issues and their solutions, understanding why they occur and how to address them effectively.

Connection Issues: The Gateway Problems

Think of connection issues like problems with the entrance to a building – if people can’t get in, nothing else matters. Here are the most common scenarios you’ll encounter:

When LDAP Clients Can't Connect

This is often like trying to enter a building but finding all the doors locked. The first step is to verify basic connectivity:

# Check if we can reach the server

ping <oid_server>

# Verify if the service is listening

netstat -tlnp | grep oidldapd

If these basic checks pass but problems persist, we need to look deeper. Think of it as checking not just if the door exists, but if it’s properly connected to the security system:

# Check OID process status

oidctl status

# Follow new connection attempts in the logs as they arrive

tail -f $ORACLE_HOME/ldap/log/oid*.log | grep "CONNECT"

SSL/TLS Connection Failures

SSL/TLS issues are like having the right key to the door but finding it doesn’t quite fit. First, verify your certificates are valid:

# Check certificate validity

openssl x509 -text -noout -in <certificate_file>

Think of this as examining the key under a microscope: we’re making sure it’s cut correctly and hasn’t expired.
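Reading the full certificate dump by eye is fine for a one-off check, but for routine monitoring you can let openssl do the date arithmetic with its -checkend option. Here’s a minimal sketch. The function name cert_expiry_status and the 30-day default window are assumptions.

```shell
#!/bin/bash
# Report whether a PEM certificate expires within a given number of days.
# Exit status: 0 = valid beyond the window
#              1 = expires within the window, has expired, or cannot be parsed
#              2 = file is missing or unreadable
# (cert_expiry_status is a hypothetical helper.)
cert_expiry_status() {
    local cert_file=$1 days=${2:-30}
    [ -r "$cert_file" ] || return 2
    # -checkend N exits 0 only if the certificate is still valid N seconds from now.
    if openssl x509 -checkend $(( days * 86400 )) -noout -in "$cert_file" >/dev/null 2>&1; then
        return 0
    fi
    return 1
}
```

You could call it as `cert_expiry_status <certificate_file> 30 || echo "ALERT: check certificate"` from a scheduled job.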

Performance Issues: The Speed Bumps

Performance problems are like traffic jams – they slow everything down and frustrate users. Let’s look at how to diagnose and resolve them.

Slow LDAP Operations

When operations start running slowly, we need to look at several potential bottlenecks. Think of this like investigating why traffic has slowed down on a highway:

# Check system resource usage

top -b -n 1

# Monitor OID processes specifically

# The [o] keeps grep from matching its own process

ps -eo pid,ppid,%cpu,%mem,cmd | grep '[o]idldapd'

For database performance, we need to check if there are any traffic jams in our data highway:

-- Check for database bottlenecks
SELECT event, total_waits, time_waited
FROM v$system_event
WHERE wait_class != 'Idle'
ORDER BY time_waited DESC;

This query helps us understand where database operations are spending their time, which is like identifying where the traffic jams are occurring. The time_waited column is reported in hundredths of a second.

Best Practices: Building a Robust Environment

Now that we understand how to optimize and troubleshoot our OID environment, let’s explore best practices that will help maintain peak performance and reliability over time.

Security: Building Strong Walls

Security in OID is like protecting a valuable vault – you need multiple layers of protection. Let’s implement these layers systematically:

Strong Authentication

First, let’s set up robust password policies:

ldapmodify -h <hostname> -p <port> -D cn=orcladmin -w <password> << EOF
dn: cn=pwdpolicy,cn=Common,cn=Products,cn=OracleContext
changetype: modify
replace: pwdMinLength
pwdMinLength: 12
-
replace: pwdCheckSyntax
pwdCheckSyntax: 1
EOF

Think of this as setting standards for the keys to your vault: they need to be complex enough to resist tampering but usable enough for legitimate users. Check the DN of the password policy entry in your own directory before applying the change, because it differs between deployments.

Audit Trail: Keeping Track of Activities

Setting up proper auditing is like installing security cameras in your vault. Once auditing is enabled, you can review the last day of activity with a query like this:

-- Review recent activities
SELECT operation_time, dn, requester_dn, operation
FROM ods.ods_audit_log
WHERE operation_time > SYSDATE - 1
ORDER BY operation_time DESC;

This helps us maintain a clear record of who did what and when, which is essential for both security and compliance.

Proactive Monitoring: Staying Ahead of Problems

The key to maintaining a healthy OID environment is proactive monitoring. Let’s set up a comprehensive monitoring system:

#!/bin/bash
# monitor_oid_health.sh

# Monitor connection usage
MAX_CONNECTIONS=1000
current_connections=$(oidctl status | grep "Current Connections" | awk '{print $4}')
# Compare only when the value is numeric, so empty output does not cause an error
if [[ "$current_connections" =~ ^[0-9]+$ ]] && [ "$current_connections" -gt "$MAX_CONNECTIONS" ]; then
    echo "ALERT: Connection threshold exceeded: ${current_connections}/${MAX_CONNECTIONS}"
fi

# Check cache effectiveness
cache_stats=$(ldapsearch -h localhost -p 3060 -D cn=orcladmin -w <password> \
    -b "cn=statistics,cn=monitor" "(objectclass=*)")

Think of this script as a health monitor for your directory: it checks vital signs and alerts you when something needs attention. Run it on a schedule, for example from cron, so that it checks regularly rather than once.
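An alert that fires only once the connection limit is already exceeded leaves little time to react. A possible refinement, sketched below, is to grade the alert by how close you are to the limit. The function names and the 80% warning level are assumptions to adapt to your environment.

```shell
#!/bin/bash
# Percentage of the connection limit currently in use (integer arithmetic).
# (connection_usage_pct and connection_alert_level are hypothetical helpers.)
connection_usage_pct() {
    local current=$1 max=$2
    echo $(( current * 100 / max ))
}

# Map usage to OK / WARNING / CRITICAL.
# Arguments: current connections, maximum connections, optional warning percentage.
connection_alert_level() {
    local pct warn_pct=${3:-80}   # assumption: warn at 80% of the limit
    pct=$(connection_usage_pct "$1" "$2")
    if [ "$pct" -ge 100 ]; then
        echo "CRITICAL"
    elif [ "$pct" -ge "$warn_pct" ]; then
        echo "WARNING"
    else
        echo "OK"
    fi
}

connection_alert_level 850 1000   # prints WARNING
```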

Maintenance Rhythm: Keeping the Engine Running Smooth

Just like any complex machinery, OID needs regular maintenance. Here’s how to structure your maintenance schedule:

Daily Care:

Think of these as daily health checks, like checking the oil level in your car: quick but essential. Review your alert logs, check connection statistics, and monitor resource usage.

Weekly Maintenance:

These are your more thorough checkups. Gather schema statistics, analyze performance trends, and review security logs. This is similar to a weekly car wash and inspection – more thorough than daily checks but still routine.

Monthly Overhaul:

This is your comprehensive review – like taking your car in for scheduled maintenance. Conduct a full system performance review, update your capacity planning, and perform detailed security audits.

Conclusion

Managing Oracle Internet Directory is like maintaining a complex machine – it requires attention to detail, regular maintenance, and a good understanding of how all the parts work together. By following these optimization techniques, troubleshooting approaches, and best practices, you’ll be well-equipped to maintain a healthy and efficient OID environment.

Remember, these settings and procedures aren’t set-in-stone rules – they’re more like guidelines that you should adapt to your specific environment’s needs. Always test changes in a non-production environment first, and keep detailed records of what works best for your particular setup.

Looking Ahead: Your Next Steps in OID Management

As we’ve explored the fundamentals of OID optimization and troubleshooting in this guide, you might be wondering about the next challenge: scaling your OID environment as your organization grows. How do you know when it’s time to scale? What are the best approaches to growing your infrastructure?

I address these questions in detail in our follow-up guide: Mastering Oracle OID Scaling: A Field Engineer’s Guide to System Growth. This companion piece builds on the optimization principles we’ve discussed here and shows you how to effectively scale your optimized environment.

In that guide, we explore:

- Key indicators that signal when scaling is needed

- Proven strategies for handling growth in user base and directory size

- Real-world scenarios and solutions from enterprise environments

- Advanced configuration techniques for high-availability and performance at scale

The journey of OID management doesn’t end with optimization – it evolves as your organization grows. I encourage you to check out the scaling guide when you’re ready to take your OID environment to the next level.

Remember, a well-optimized foundation (as we’ve covered in this guide) is essential before considering any scaling initiatives. Make sure you’ve implemented the optimization strategies we’ve discussed here before moving on to scaling considerations.

Note: Always test these configurations in a development environment first, and adjust the parameters based on your specific needs and environment characteristics.